Like a lot of SaaS companies, the CloudZero engineering team uses our own product — or as many would say, we “eat our own dog food” and “drink our own champagne.”

So, when we built and released our cost per customer functionality last year, it was only natural that we were our own guinea pigs.

Today, I use our cost per customer reports and cost intelligence data all the time, especially to answer important questions from the rest of the leadership team, like when our CEO needs data to present to the board or our CRO wants to understand our margins on different customers at renewal time.

Cost per customer metrics are incredibly valuable, but I know from speaking with peers that they're very challenging to obtain on your own.

Multi-tenant architecture and Kubernetes (whether you’re running your own or using a managed service like EKS) can obscure the alignment between the infrastructure you’re paying for (e.g., your EC2 instances) and the customers who utilize that infrastructure.

In my experience, many organizations end up slicing data in spreadsheets and taking their best guess.

For those of you who are thinking about embarking on a project to get to accurate, granular cost per customer metrics — here’s a little bit about how we do it on our team.

I’ll walk through how our cost per customer functionality works, the metrics we care about, some surprise learnings we gleaned along the way — and generally how we “practice what we preach” (last one, I promise).

Why Cost Per Customer Data?

First of all, you might be wondering why this data is so important to us.

SaaS businesses are kind of unusual. A customer purchases a product and starts using the platform. Depending on any number of variables (e.g., their utilization patterns or the amount of data they send), the cost to support that customer can vary significantly.

Without understanding what your customers cost, as well as which aspects of your product drive those costs, you're flying blind and risk being surprised by higher costs than you expected.

In my mind (and I think our CEO would agree), the most exciting thing about cost per customer data is not really about saving money — it’s about the strategic decisions that can be made to maximize our margins and strengthen our business.

As a growing company, we’re constantly reassessing where it makes the most sense for us to spend our time and energy — and how to optimize our sales and go-to-market processes.

For example, let's say, hypothetically, that our mid-market customers have twice the margin of our enterprise customers. Our leadership team can combine that information with metrics like customer acquisition cost (CAC) and make well-informed decisions about which kinds of companies we should target.

That’s powerful.

How It Works

So, how do we get this granular cost per customer data?

Let’s start with how our platform works. I’ll also cover some specific CloudZero terms we use and what they mean, so you can easily follow along.

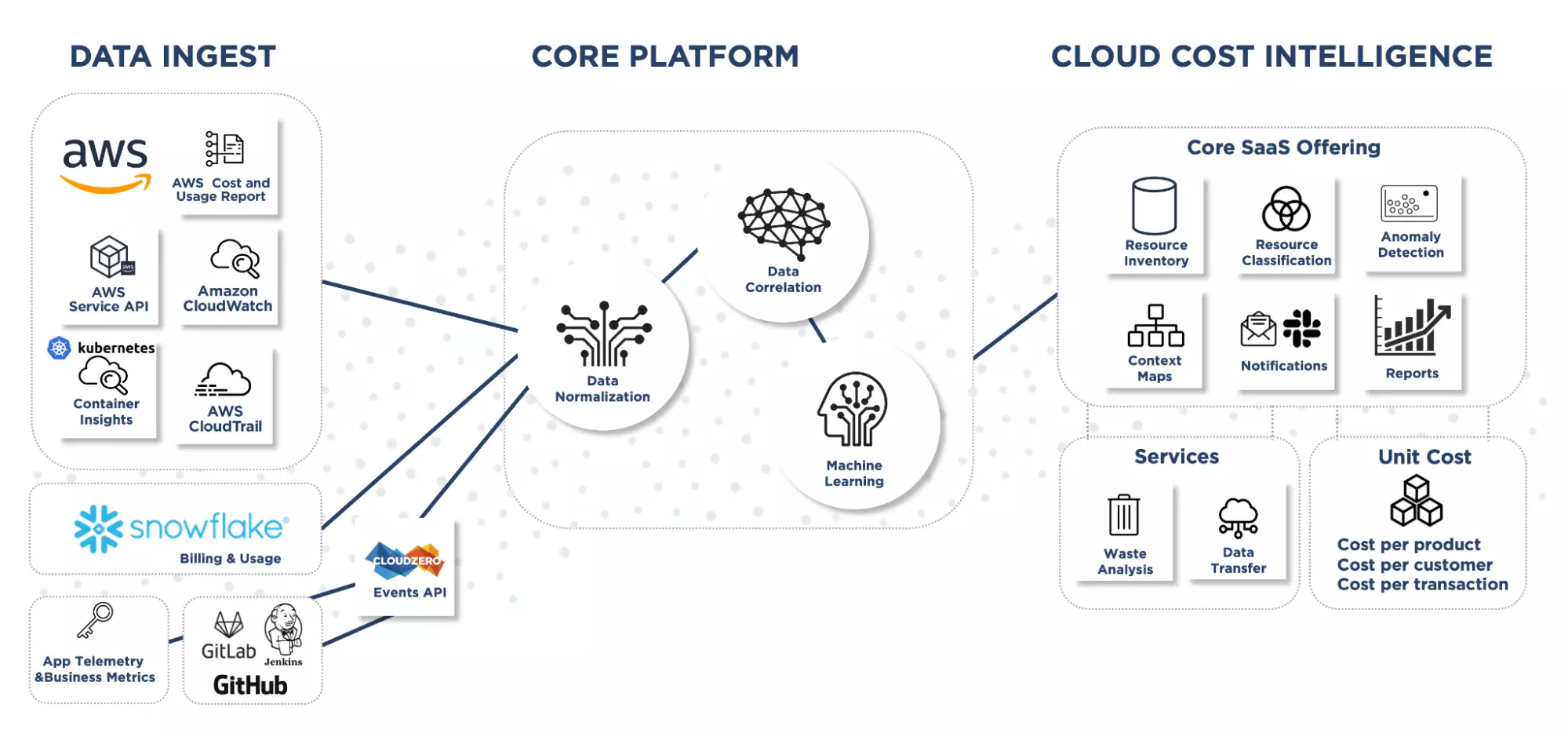

One of CloudZero’s main differentiators is our ability to take huge amounts of different data and disparate data streams and join them together in a way that’s performant and gives you new insights. You can read more about that here if you’re interested in why our platform is designed the way it is.

We’re also not just pulling in data from your cost and usage report (CUR) like traditional cost management platforms. We’re built more like an observability platform — so we pull in lots of data and metadata from your environment to really enrich your billing data and give you context about how your cost connects with your architecture and events (like your deployments or your Black Friday sale).

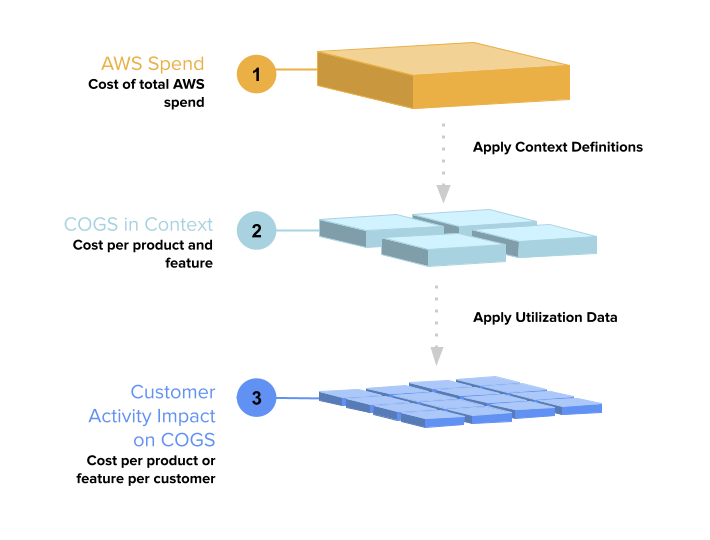

For cost per customer insight, we take all of that rich COGS data and apply two additional layers of context. Here’s a simple diagram to visualize how we think about it, which I’ll explain in more detail next.

Layer 1: COGS in Context

The first step we take with every customer is to allocate your costs to different groups that align with however you think about reporting on COGS. There are all kinds of different dimensions and variations that customers track, such as environment, dev teams, geographical region, etc.

For most companies we work with, the first step is to organize cost by product and feature; they then build from there by adding more dimensions. Also, unlike other cost tools, we've designed a simple, flexible way to group your costs into whatever context makes sense for your business, and we don't rely on tags alone.

Instead, we use what we call “context definitions.” They're called definitions because you're essentially defining logical rules for how to group your cost. We can base these rules on all kinds of data, including your tags, service metadata, Kubernetes clusters, and more. The rules are written in a domain-specific language and stored in a YAML file, so they're managed just like any other infrastructure as code component.
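CloudZero's actual context definitions use its own YAML-based DSL, which isn't reproduced here. Purely to illustrate the idea of rule-based grouping, here is a minimal Python sketch under stated assumptions: the `LineItem` fields, the rules, and the feature names are all hypothetical. Each rule pairs a feature name with a predicate over a line item's service, tags, or cluster, and the first matching rule wins; anything unmatched lands in a catch-all bucket.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class LineItem:
    """A simplified billing line item (hypothetical fields)."""
    cost: float
    service: str
    tags: dict[str, str] = field(default_factory=dict)
    cluster: Optional[str] = None


# A rule is a (feature name, predicate) pair; the first predicate that matches wins.
Rule = tuple[str, Callable[[LineItem], bool]]

RULES: list[Rule] = [
    ("Billing", lambda li: li.tags.get("feature") == "billing"),
    ("Anomalies", lambda li: li.cluster == "anomaly-models"),
    ("Shared Storage", lambda li: li.service == "AmazonS3"),
]


def assign_feature(item: LineItem, rules: list[Rule]) -> str:
    """Return the first matching feature, or a catch-all bucket."""
    for feature, matches in rules:
        if matches(item):
            return feature
    return "Unallocated"


def group_costs(items: list[LineItem], rules: list[Rule]) -> dict[str, float]:
    """Sum cost per feature according to the rules."""
    totals: dict[str, float] = {}
    for item in items:
        feature = assign_feature(item, rules)
        totals[feature] = totals.get(feature, 0.0) + item.cost
    return totals


items = [
    LineItem(120.0, "AWSLambda", tags={"feature": "billing"}),
    LineItem(40.0, "AmazonEKS", cluster="anomaly-models"),
    LineItem(15.0, "AmazonS3"),
    LineItem(5.0, "AmazonEC2"),
]
print(group_costs(items, RULES))
# {'Billing': 120.0, 'Anomalies': 40.0, 'Shared Storage': 15.0, 'Unallocated': 5.0}
```

Keeping an explicit "Unallocated" bucket makes gaps in the rules visible instead of silently dropping cost.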

During this process, a CloudZero cost expert will walk you through each step, help you figure out which data to use, and build the initial context mapping for you. The whole process usually takes only an hour or two, and at the end you'll be able to see your COGS in context.

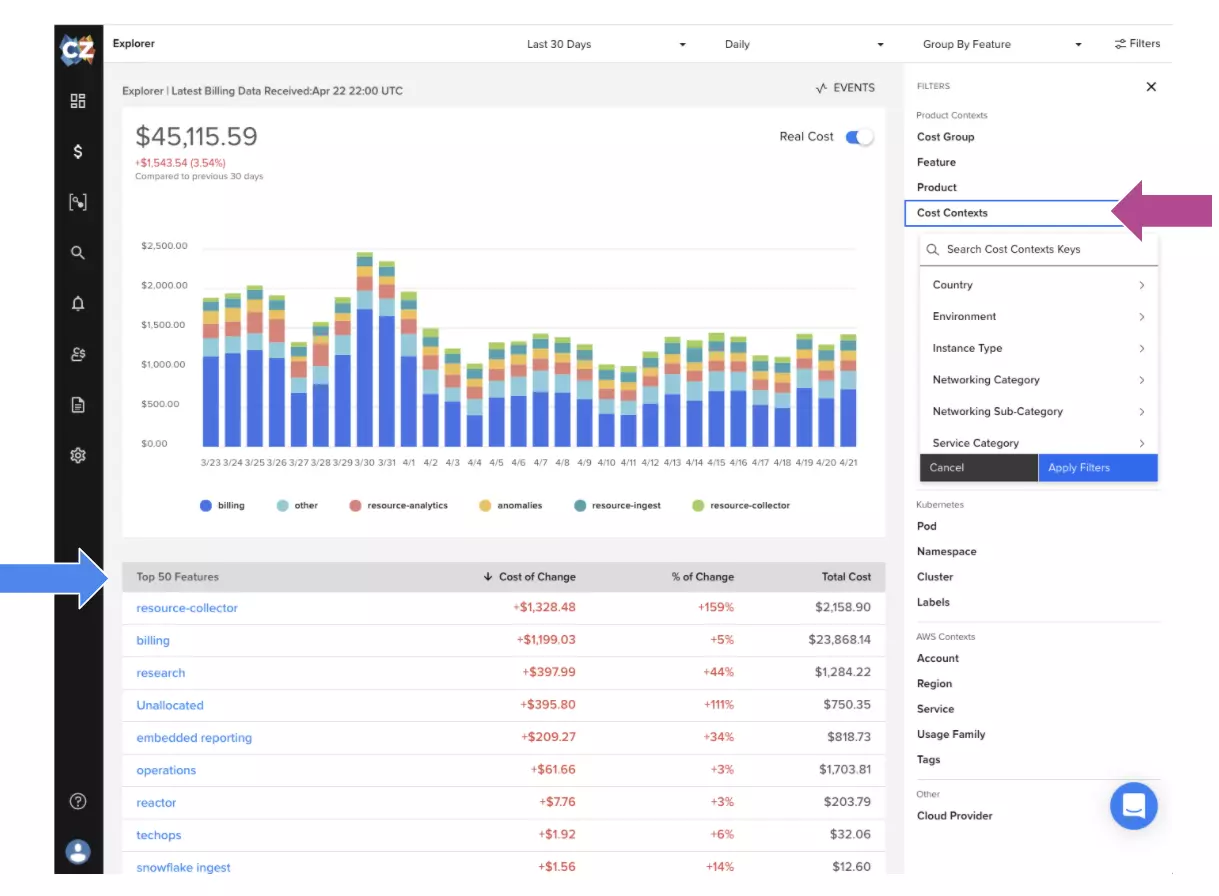

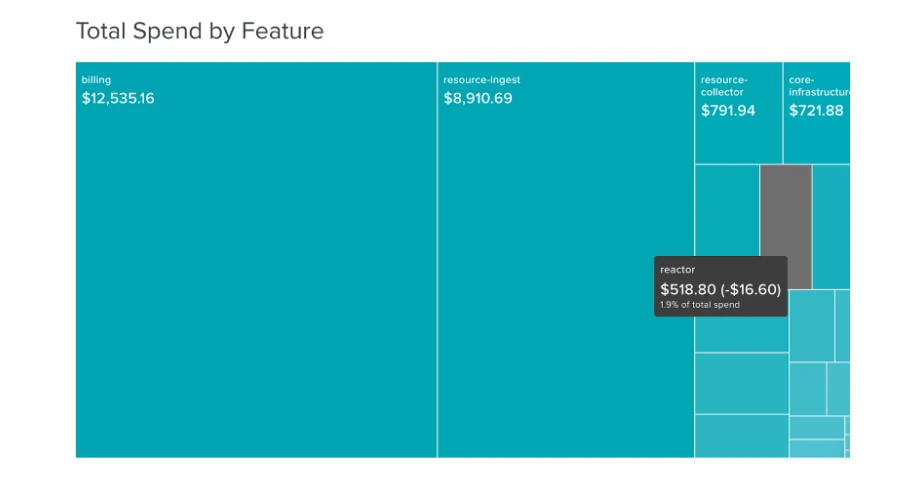

Here’s what it looks like when it’s done:

If you look at the blue arrow, you’ll see a partial list of our product features. You might notice “Billing” is the most expensive part of CloudZero. That’s our billing ingest process, which pulls in and processes all of our customers’ data. The sheer amount of data we’re pulling in is huge — and if you’ve been working in the cloud for a while, you know these kinds of processes aren’t cheap!

If you take a look at the purple arrow, you’ll see some of the other contexts you can filter on or group by, like environment. This context helps us separate production from R&D spend, which is necessary to calculate COGS.
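To make the production versus R&D split concrete, here is a minimal sketch assuming each cost record has already been grouped by feature and tagged with an environment. The records and the environment names (`prod`, `staging`, `dev`) are hypothetical. Only production spend counts toward COGS; everything else is treated as R&D.

```python
from collections import defaultdict

# Hypothetical (feature, environment, daily cost in USD) records after grouping.
records = [
    ("Billing", "prod", 900.0),
    ("Billing", "dev", 150.0),
    ("Anomalies", "prod", 300.0),
    ("Anomalies", "staging", 50.0),
]


def split_cogs(rows):
    """Split costs into per-feature COGS (production) and total R&D (everything else)."""
    cogs = defaultdict(float)
    r_and_d = 0.0
    for feature, env, cost in rows:
        if env == "prod":
            cogs[feature] += cost
        else:
            r_and_d += cost
    return dict(cogs), r_and_d


cogs, r_and_d = split_cogs(records)
print(cogs)     # {'Billing': 900.0, 'Anomalies': 300.0}
print(r_and_d)  # 200.0
```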

Layer 2: Customer Activity Impact on COGS

Once your costs are organized into all of the COGS metrics you care about, you now have the option to take it a step further — and apply customer utilization data to the COGS metrics you defined in Layer 1.

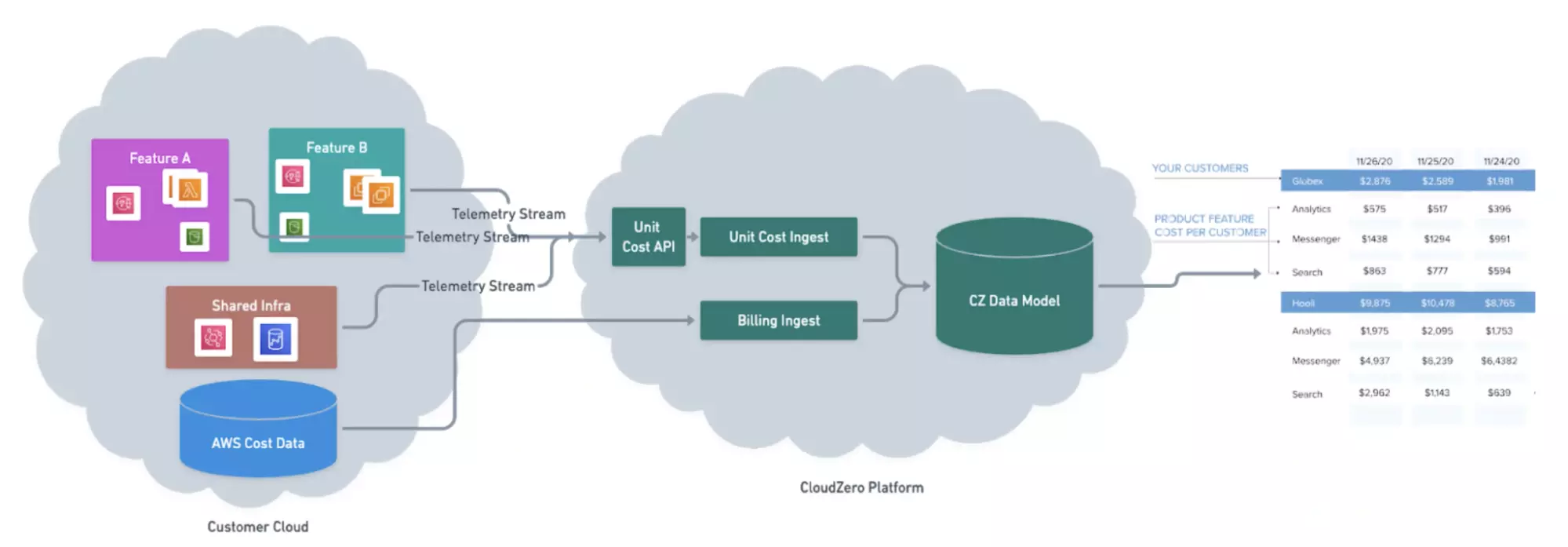

Here’s a marketecture diagram of what the process looks like:

Your running application produces what we refer to as a telemetry stream. Telemetry streams can take many different forms, but in CloudZero terms, a telemetry stream is a set of usage records sent to the CloudZero platform via an API.

For example, it might tell us “Customer 1 used X units of feature A, and Customer 2 used Y units of that same feature but also Z units of feature B.”
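As a rough illustration of what such records and the normalization step might look like, here is a Python sketch. The record fields (`timestamp`, `customer_id`, `feature`, `units`) are assumptions, not CloudZero's actual telemetry schema. The sketch rolls raw records up into daily totals per feature and customer.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class TelemetryRecord:
    """One hypothetical usage record: a customer used some units of a feature."""
    timestamp: datetime
    customer_id: str
    feature: str
    units: float


def daily_usage(records):
    """Normalize raw records to a daily grain: (day, feature) -> {customer: units}."""
    totals = defaultdict(lambda: defaultdict(float))
    for r in records:
        totals[(r.timestamp.date(), r.feature)][r.customer_id] += r.units
    return {key: dict(per_customer) for key, per_customer in totals.items()}


records = [
    TelemetryRecord(datetime(2023, 3, 1, 9), "customer-1", "A", 5),
    TelemetryRecord(datetime(2023, 3, 1, 17), "customer-1", "A", 3),
    TelemetryRecord(datetime(2023, 3, 1, 12), "customer-2", "A", 12),
    TelemetryRecord(datetime(2023, 3, 1, 12), "customer-2", "B", 7),
]
print(daily_usage(records))
```

Switching to an hourly grain would only change the bucketing key, for example truncating the timestamp to the hour instead of taking `.date()`.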

We take each of those telemetry streams, normalize it over an hour or a day depending on the granularity you want, and then join it with your billing model. We apply simple logic like this:

We know:

- Feature A costs $10,000 per day.

- Customer 1 used 1% of the total usage of that feature yesterday.

Therefore:

- Customer 1’s utilization of Feature A costs you $100 per day.

Except instead of a basic equation, we do this across a rich, complex data set to give you granular customer utilization and cost data.
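Here is a minimal sketch of that proportional allocation, assuming daily feature costs and daily per-customer usage are already available; the names and figures are hypothetical. A customer's share of a feature's cost equals that customer's units divided by total units for the feature, multiplied by the feature's cost, which reproduces the $100 example above.

```python
def allocate_feature_cost(feature_cost, usage_by_customer):
    """Split one feature's cost across customers in proportion to their usage units."""
    total_units = sum(usage_by_customer.values())
    if total_units == 0:
        return {}  # no usage recorded: nothing to allocate, so the cost stays unallocated
    return {
        customer: feature_cost * units / total_units
        for customer, units in usage_by_customer.items()
    }


# Feature A costs $10,000/day; Customer 1 accounts for 1% of its usage.
usage = {"customer-1": 1.0, "everyone-else": 99.0}
allocation = allocate_feature_cost(10_000.0, usage)
print(allocation["customer-1"])  # 100.0
```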

The whole process is pretty simple: sending in a telemetry stream takes one engineer anywhere from a couple of hours to one working day, so it's not a huge lift. We'll also help you figure out which telemetry makes sense for your business.

How We Chose Telemetry Streams for CloudZero

When we started the process of measuring CloudZero’s cost per customer, we had already organized our cost into the COGS metrics we cared about, but needed to figure out which telemetry streams would help us apply utilization data.

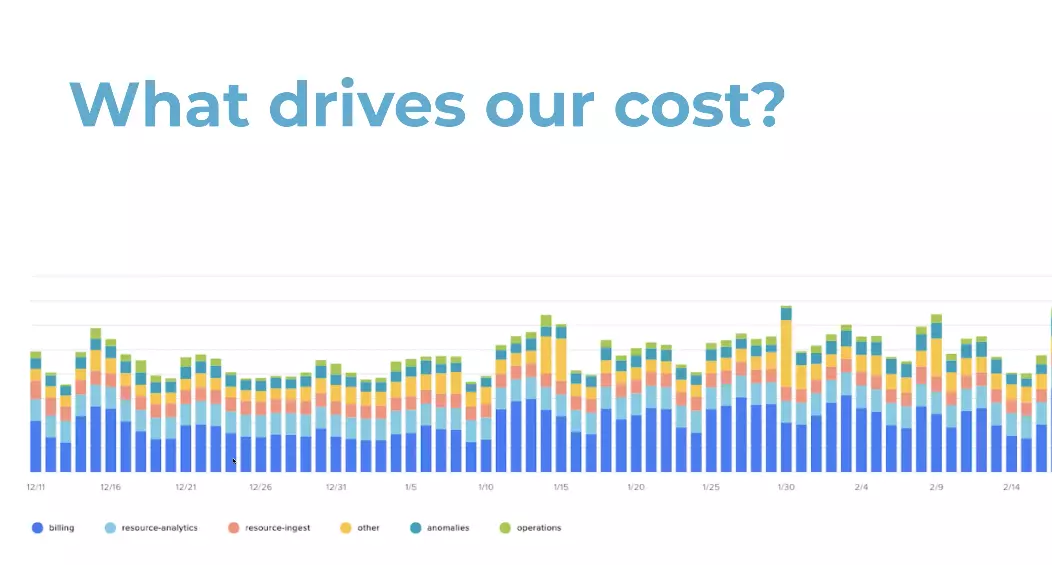

The question we asked ourselves was, “What’s driving our cost?”

As I mentioned earlier, “billing” is our most expensive feature. I'm a big fan of a “crawl, walk, run” approach, so we decided to start with the billing feature alone, figuring it was probably fairly representative of the whole platform.

Pictured: CloudZero’s cost per product feature over time

We took a step back and asked ourselves what would be a good proxy for usage.

Well, the way our platform works is we pull in billing line items and process them. Since we have a serverless architecture, we knew that every second of computation time costs a certain amount. So, we decided to measure the time it takes for every job.

The telemetry API has a simple JSON format, which we make available to each of our customers. Each time we ingest data for a customer we create a record of usage for that customer and send it along to the API.
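A minimal sketch of the job-timing approach under stated assumptions: the record fields (`timestamp`, `customer_id`, `feature`, `value`, `unit`) are hypothetical and not taken from CloudZero's API documentation, `fake_ingest` stands in for a real ingest job, and the HTTP call that would send the payload is omitted. The wrapper times a job with a monotonic clock and produces one JSON usage record per customer per run.

```python
import json
import time
from datetime import datetime, timezone


def run_and_record(customer_id, job, *args):
    """Run an ingest job for one customer and return (result, JSON usage record).

    The usage unit is elapsed seconds of processing, used as a proxy for
    serverless compute cost. Field names are hypothetical.
    """
    start = time.monotonic()
    result = job(*args)
    elapsed = time.monotonic() - start
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "customer_id": customer_id,
        "feature": "billing",
        "value": round(elapsed, 3),
        "unit": "seconds",
    }
    return result, json.dumps(record)


def fake_ingest(line_items):
    """Stand-in for a real billing ingest job (hypothetical)."""
    return len(line_items)


count, payload = run_and_record("customer-1", fake_ingest, ["li-1", "li-2"])
print(count)  # 2
print(payload)
```

Measuring inside the ingest code ties the usage figure directly to the work done for each customer, which is what makes elapsed time a reasonable cost proxy on a serverless architecture.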

After we got the data flowing for billing, we added three more telemetry streams to cover our next three most expensive features. Each one measured usage in a slightly different way. For example, for our “anomalies” feature, we used the total time spent calculating and running models for each customer every day.

If you’re doing this for your environment, there are a number of ways you can get this data – and one of our experts will walk you through it.

CloudZero Cost Per Customer Report

Now, for the exciting part — what does this data actually look like in practice?

We use the built-in CloudZero Cost Per Customer report. Some customers who use this functionality choose to pull the data into their own business intelligence or monitoring tool for more flexibility or so they can combine it with revenue data — but the report gives a great overview for most.

The first key part of the report is our average cost per customer. We’re a growing SaaS company that’s onboarding new customers all the time – so it’s a good thing if our bill is going up!

However, we also want to ensure that we don’t grow our cloud cost in a way that’s going to hurt our margins. Instead, we want to work toward economies of scale. This section helps me track that.
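Economies of scale show up as a flat or falling average cost per customer even while the total bill grows. A minimal sketch of that metric, using made-up monthly figures (total cost divided by the number of customers served in the period):

```python
# Hypothetical monthly totals: (month, total cloud COGS in USD, active customers).
months = [
    ("Jan", 40_000.0, 100),
    ("Feb", 46_000.0, 120),
    ("Mar", 52_500.0, 150),
]


def average_cost_per_customer(total_cost, customers):
    """Total cost divided by the number of customers served in the period."""
    return total_cost / customers


averages = {m: round(average_cost_per_customer(c, n), 2) for m, c, n in months}
print(averages)  # {'Jan': 400.0, 'Feb': 383.33, 'Mar': 350.0}
```

In this invented example the total bill rises every month while the per-customer figure falls, which is the trend this section of the report is meant to confirm.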

You might notice a small spike in mid-March. We onboarded a new customer whose data had much higher cardinality than we had seen before, so we temporarily scaled up some of our data warehouses to ensure good performance.

This came with an additional cost. Luckily, because of this report and our cost anomaly alerts, we noticed it right away and were able to do some work to bring it back to normal within days.

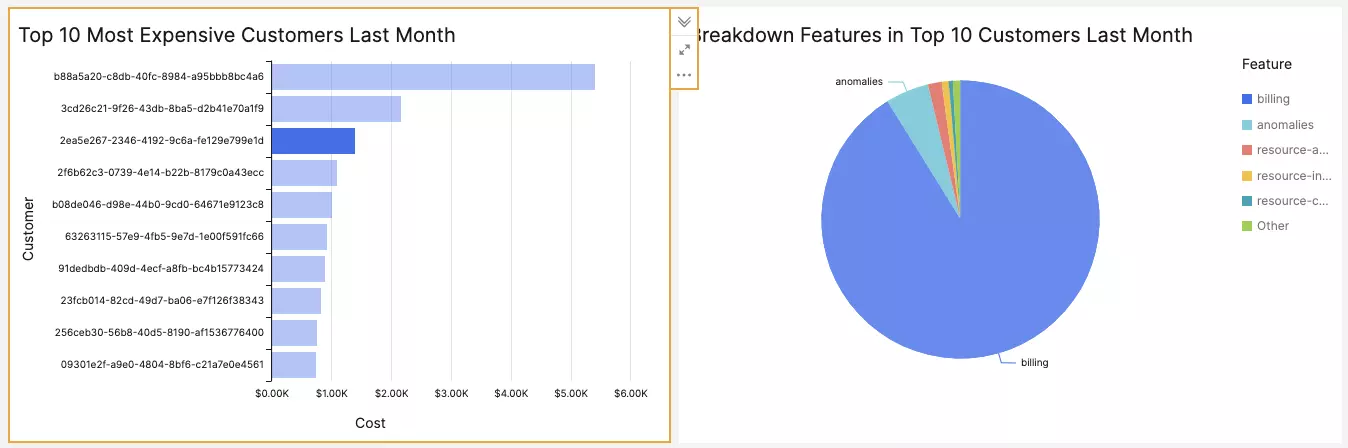

The next part of the report shows us the cost of our individual customers. We’ve changed all of their names to different codes for privacy reasons, but each line and box on the treemap represents a distinct customer.

I can actually click into any of them (notice how the dark blue is highlighted on the left) and it will show me the breakdown of their cost per feature on the right.

This is a great way for us to understand exactly how much it costs to support each of our customers, from small startups to large enterprises.

Here’s an example of how it helped us in the last year.

We started a proof of concept with a pretty large company — and they quickly became our second most expensive customer because they had so much billing data!

I was able to share the daily cost to support that customer with our revenue team. As a result, they were able to price the contract appropriately so that our margin on the account matched our expectations.
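The arithmetic behind that kind of pricing conversation can be sketched with hypothetical numbers: annualize the daily cost to serve, then find the smallest annual price that still meets a target gross margin. Since margin = (price - cost) / price, the minimum price is cost / (1 - margin). The daily cost and the 80% target below are invented for illustration.

```python
def minimum_price(annual_cost, target_margin):
    """Smallest price whose gross margin (price - cost) / price meets the target."""
    if not 0 <= target_margin < 1:
        raise ValueError("target_margin must be in [0, 1)")
    return annual_cost / (1 - target_margin)


daily_cost = 200.0              # hypothetical daily cost to serve one customer
annual_cost = daily_cost * 365  # 73,000
price = minimum_price(annual_cost, 0.80)
print(round(price, 2))  # 365000.0
```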

Additionally, our sales engineering team made a point of turning off the customer's data feed into the platform once the proof of concept had ended and while the contract was still being processed, so we weren't spending money while the customer wasn't even looking at their data. It was a great example of how access to cost data makes everyone more cost-conscious.

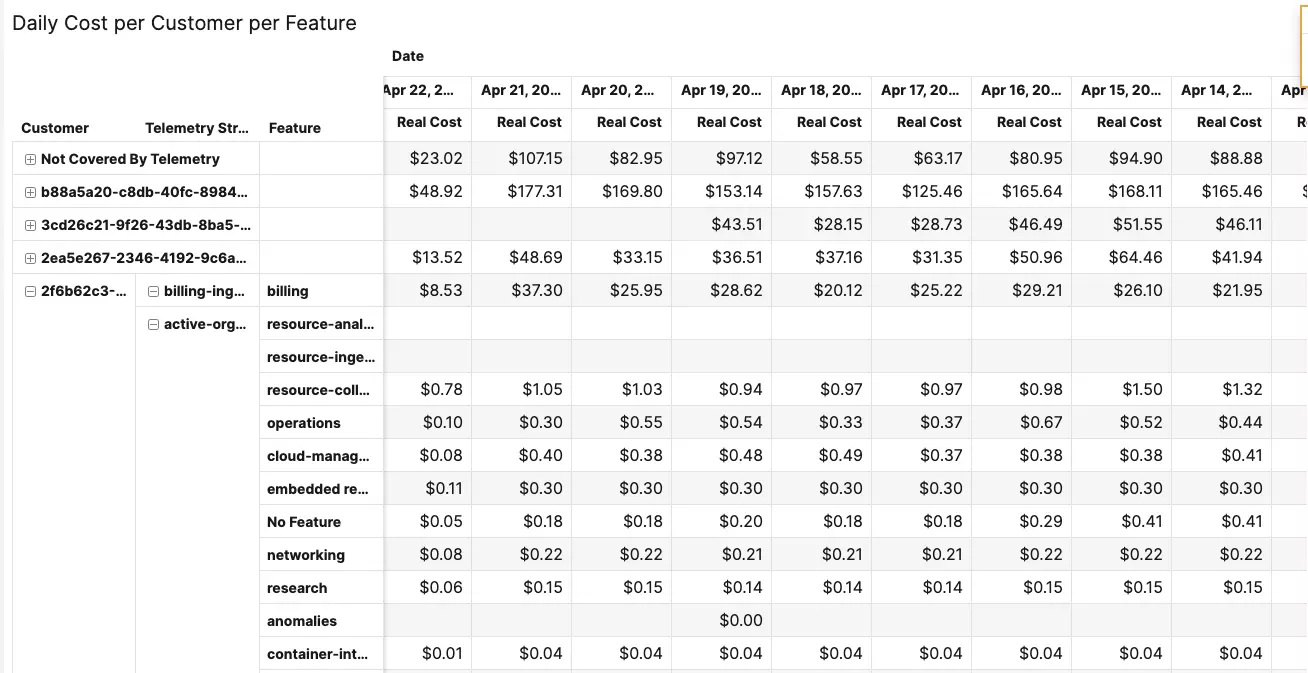

The last part of the report shows raw daily data on cost per customer per feature. This is where our utilization data really shines. I can export this data to our internal BI tool to explore it or build presentations.
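Exporting for a BI tool usually means flattening the data to one row per day, customer, and feature. A minimal sketch using Python's standard `csv` module; the column names and rows are hypothetical and do not reflect the report's actual export format.

```python
import csv
import io

# Hypothetical raw rows: (date, customer code, feature, cost in USD).
rows = [
    ("2023-03-01", "cust-0042", "Billing", 812.40),
    ("2023-03-01", "cust-0042", "Anomalies", 95.10),
    ("2023-03-01", "cust-0107", "Billing", 64.75),
]


def to_csv(data):
    """Serialize rows to CSV text with a header row, ready to load into a BI tool."""
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(["date", "customer", "feature", "cost_usd"])
    writer.writerows(data)
    return buffer.getvalue()


print(to_csv(rows))
```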

One of the ways this can be helpful is to understand how different segments of the market use our product differently. Let's say, hypothetically, we found that small customers mostly use our built-in report, while enterprises rely heavily on our API to consume their data in a different tool. If utilization of our API were disproportionately expensive, we might consider pricing our enterprise packages differently.
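A sketch of that hypothetical segment analysis: group per-feature cost by customer segment and compute each feature's share of the segment's total. The segments, feature names, and figures are invented for illustration.

```python
from collections import defaultdict

# Hypothetical rows: (segment, feature, cost in USD) for one period.
rows = [
    ("small", "Report", 300.0),
    ("small", "API", 100.0),
    ("enterprise", "Report", 500.0),
    ("enterprise", "API", 1500.0),
]


def feature_share_by_segment(data):
    """Return {segment: {feature: fraction of that segment's total cost}}."""
    totals = defaultdict(lambda: defaultdict(float))
    for segment, feature, cost in data:
        totals[segment][feature] += cost
    shares = {}
    for segment, by_feature in totals.items():
        segment_total = sum(by_feature.values())
        shares[segment] = {f: c / segment_total for f, c in by_feature.items()}
    return shares


print(feature_share_by_segment(rows))
# {'small': {'Report': 0.75, 'API': 0.25}, 'enterprise': {'Report': 0.25, 'API': 0.75}}
```

A skew like the invented one above, with API cost dominating one segment, is the kind of signal that would prompt a closer look at how that segment's packages are priced.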

I know this data will be critical as we continue to grow and evolve as a company.

Interested In Learning More?

If you’re interested in learning more about how CloudZero cloud cost intelligence can help you measure your cost per customer, request a demo today. The process is simple and expert-led, so you can gain granular visibility into your cost, whether you’re a cost expert or not.