The person who coined the term “Artificial Intelligence” also predicted that someday people would buy software as a utility. The year was 1961, and that person was Professor John McCarthy, a computer scientist at Stanford University.

After Salesforce began selling software this way in the late 1990s, the good professor saw his prophecy come true for about 12 years before his passing in 2011. Yet this is only one aspect of cloud computing as we know it today.

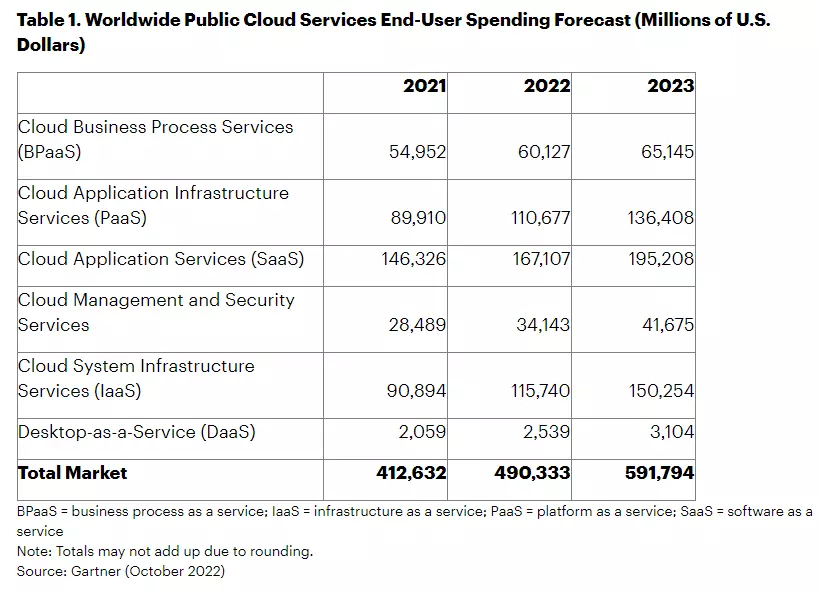

Gartner predicts that end-user spending on public cloud services worldwide will reach $592 billion in 2023.

Now let’s take a look back at where it all began, where it’s heading, and some powerful cloud technology trends to watch. We’ll begin by defining the cloud.

If you’d rather listen to how the cloud started, “Cloud Atlas: How The Cloud Reshaped Human Life” is a podcast series that tells the story of the cloud, from prehistoric times (i.e., the 1990s) to the present day.

What Is The Cloud?

The cloud refers to an internet-based network of servers that supports computing from anywhere, at any time. Cloud computing is the practice of multiple users sharing online storage, databases, and compute power (CPU, RAM, and networking) over the internet.

It is called “the cloud” because the supporting infrastructure, services, and resources are located in third-party-owned data centers all across the world, not locally on an end user’s device.

The cloud eliminates the need for you (the cloud end user) to purchase, install, and maintain hardware in your own on-premises data center. Instead, you rent computing capacity, use as much or as little of it as you need, and pay based on your usage.
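The difference between paying for what you use and paying for enough capacity to cover your busiest moment comes down to simple arithmetic. The Python sketch below is a simplified illustration, not any provider’s actual billing model: the per-vCPU-hour price and the usage pattern are hypothetical, and “peak provisioned” stands in for owning (or reserving) enough hardware to handle the busiest hour all day long.

```python
# Minimal sketch of utility-style (pay-per-use) billing versus paying for
# peak capacity around the clock. All rates and usage figures are hypothetical.

HYPOTHETICAL_CENTS_PER_VCPU_HOUR = 5  # illustrative price, not a real provider rate


def metered_cost_cents(hourly_vcpus, rate=HYPOTHETICAL_CENTS_PER_VCPU_HOUR):
    """Pay only for the vCPUs actually used in each hour."""
    return sum(vcpus * rate for vcpus in hourly_vcpus)


def peak_provisioned_cost_cents(hourly_vcpus, rate=HYPOTHETICAL_CENTS_PER_VCPU_HOUR):
    """Pay for enough capacity to cover the busiest hour, every hour."""
    return max(hourly_vcpus) * rate * len(hourly_vcpus)


if __name__ == "__main__":
    # Hypothetical day: 20 quiet hours at 4 vCPUs, then a 4-hour spike to 32 vCPUs.
    day = [4] * 20 + [32] * 4
    print(metered_cost_cents(day))           # 1040
    print(peak_provisioned_cost_cents(day))  # 3840
```

With these made-up numbers, the metered bill is 1,040 cents versus 3,840 cents for peak capacity. The gap widens as demand gets spikier, because peak provisioning pays for the busiest hour all day while metering pays only for the hours actually used.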

As Professor John McCarthy predicted, the cloud makes it possible to buy and sell software as a utility. Online storage provider Dropbox, for example, sells storage as a service.

For more on the four types of cloud delivery models, the four cloud deployment types, and more, see our What Is the Cloud guide.

When Was the Cloud Invented?

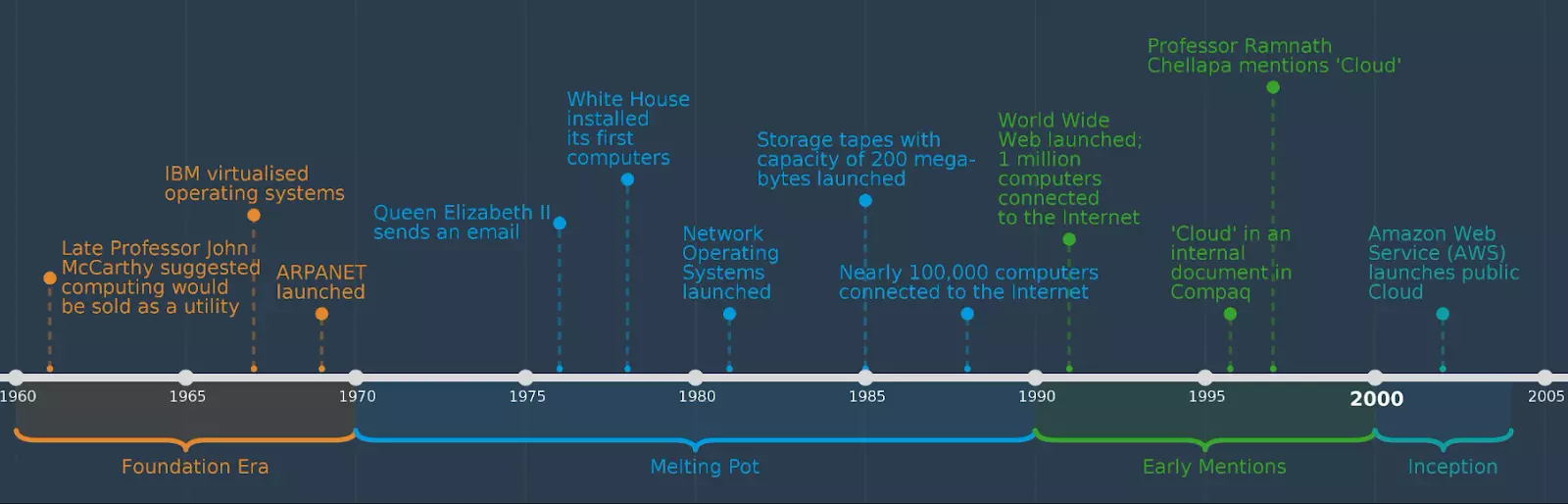

There is debate over exactly when the cloud was born. Three schools of thought point to the 1960s, the early 1990s, and the early 2000s.

Here’s the deal. Today’s cloud relies on technologies that have been refined over 70 years. Here’s a decade-by-decade look at how cloud computing has evolved.

The Beginnings Of The Cloud – 1960s

In the early 1960s, sharing a single mainframe computer is a hot topic. Mainframe computers are becoming increasingly popular, but they are colossal and prohibitively expensive, so they must be shared to reduce costs.

In 1961, Professor John McCarthy delivers a speech that still rings true today.

In that speech, McCarthy says he foresees a time when “computing will be packaged as a public utility just as the telephone system”.

He goes on to predict that:

“Each subscriber needs to pay only for the capacity he actually uses, but he has access to all programming languages characteristic of a very large system … Certain subscribers might offer service to other subscribers”

Elsewhere, Leonard Kleinrock writes the paper Information Flow in Large Communication Nets. Together with others, such as J.C.R. Licklider, the first director of the Information Processing Techniques Office (IPTO), he lays the foundation for today’s Internet.

Things move fast from there.

Two years later, in 1963, the Massachusetts Institute of Technology (MIT) receives $2 million in funding from ARPA (the Advanced Research Projects Agency, later renamed DARPA) to work on Project MAC. The requirement: develop a system that enables two or more people to use a single computer.

Time-sharing

At this point, time-sharing is possible through Remote Job Entry. IBM and the Digital Equipment Corporation (DEC) are pioneering this technology. It is possible to share one computer among dozens of users at the same time.

Users log in from terminals (typically modified teleprinters) over phone lines. The time-sharing computers generally do not connect to each other, but they introduce a variety of features found in later networks, including file sharing and email.

The history of the Internet

In 1969, J.C.R. Licklider, alongside Larry Roberts and Bob Taylor, pioneers the Advanced Research Projects Agency Network (ARPANET) for the Pentagon. Licklider is also promoting his Intergalactic Computer Network, a vision of a world where computers connect everyone and make information accessible from anywhere.

In October, they launch ARPANET, the first general-purpose computer network to connect different types of computers on a large scale. This matters because ARPANET paves the way for the modern Internet, the platform on which cloud computing runs.

To understand how the internet evolved to carry cloud computing on its back, you can learn more about the history of the internet.

The Bubbling Cloud – 1970s

The groundwork for the decade is laid at the 1968 “Mother of All Demos” in San Francisco, where Douglas Engelbart and colleagues, funded by ARPA, demo their oN-Line System (NLS).

In ninety minutes, a thousand people marvel at collaborative editing, word processing, videoconferencing, and a working computer mouse, as well as hypertext links connecting elements of the NLS to one another.

In 1972, IBM popularizes the concept of virtualization and virtual machines (VMs). With virtualized operating systems, a single host machine can run several virtual machines that each handle various computing tasks independently. Sharing computing resources this way is more efficient and cost-effective than time-sharing.

In 1973, networks are successfully connecting computers, but different kinds of networks cannot connect to each other. The next challenge: internetworking, or “internetting.” Several things happen:

- Email moves from time-sharing to computer networks.

- Ethernet is born at Xerox PARC.

- CYCLADES (France) and the NPL network (Britain) are exploring internetworking through the European Informatics Network (EIN).

- Xerox uses its PARC Universal Packet (PUP) protocol to connect Ethernets to other networks.

- Both efforts feed into ARPA’s TCP/IP internetworking protocol, sketched out by Vint Cerf and Bob Kahn.

In 1974, IBM announces its Systems Network Architecture (SNA), a set of protocols designed to support less centralized networks. This internet-like network of networks is reserved for SNA-compliant systems. Meanwhile, DEC and Xerox push to develop their own proprietary networks, DECnet and XNS, for commercial use.

In 1977, Bob Kahn and Vint Cerf link ARPANET, the Packet Radio Network (PRNET), and the Satellite Network (SATNET), proving the efficacy of their TCP/IP protocol.

Another highlight of the 1970s is the arrival of the personal computer.

The Client-Server Era – 1980s

It’s 1981, and two University of Essex students, Richard Bartle and Roy Trubshaw, write a piece of software that lets multiple people play against each other online. With that, the first Multi-User Dungeon (MUD1) goes online.

Across the Channel, France Telecom is offering free Minitel terminals to every customer, establishing the first mass “Web.” By 1990 there will be tens of millions of Minitel users, relying on online services such as email, newspapers, chat, train schedules, and tax filings.

By 1985, data storage tapes capable of holding 200 megabytes are available. There are approximately 100,000 Internet-connected computers by this time.

As hardware components become cheaper and more compact, many companies start buying their own hardware and maintaining it themselves, and data centers become less popular.

Robert T. Morris’ worm

In 1988, Robert T. Morris, the 23-year-old son of a computer security expert at the National Security Agency (NSA), causes outages on 6,000 to 60,000 hosts for days after releasing a nondestructive worm meant to “gauge the size of the internet, not cause harm.” The incident puts a spotlight on network security, which later becomes a major concern for cloud computing adoption.

Meanwhile, client-server architecture lets companies rely on central systems that host, deliver, and manage most of the resources and services their users request, which in turn lets companies buy inexpensive client machines.

Servers and client requests

In this model, a distributed application structure partitions tasks or workloads between the providers of a service or resource (servers) and service requesters (clients).

The architecture lets a client computer send requests for specific data to the server over a network. Once the server accepts a request, it sends the requested data packets back to the client. Clients do not share resources with one another.

This approach is also called the client-server network model or the network computing model. And the stage is set for the revolution that comes next.
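A minimal way to see the request/response pattern described above is a tiny server and client running on one machine. The Python sketch below uses only the standard library’s `socket`, `socketserver`, and `threading` modules; the one-line text protocol, the `RECORDS` table, and the `fetch` helper are hypothetical choices for illustration, not how any particular 1980s system worked. The server alone holds the data, and each client receives only what it explicitly asks for.

```python
import socket
import socketserver
import threading

# Hypothetical data owned by the server; clients only ever see what they request.
RECORDS = {"customer:42": "Ada Lovelace", "customer:7": "Alan Turing"}


class RecordHandler(socketserver.StreamRequestHandler):
    """Server side: read one request line, reply with the matching record."""

    def handle(self):
        key = self.rfile.readline().decode("utf-8").strip()
        value = RECORDS.get(key, "NOT FOUND")
        self.wfile.write((value + "\n").encode("utf-8"))


def fetch(address, key):
    """Client side: send one request to the server and return its reply."""
    with socket.create_connection(address) as conn:
        conn.sendall((key + "\n").encode("utf-8"))
        with conn.makefile("r", encoding="utf-8") as reply:
            return reply.readline().strip()


if __name__ == "__main__":
    # Port 0 lets the operating system pick any free local port.
    with socketserver.ThreadingTCPServer(("127.0.0.1", 0), RecordHandler) as server:
        # The server runs in a background thread, waiting for client requests.
        threading.Thread(target=server.serve_forever, daemon=True).start()
        print(fetch(server.server_address, "customer:42"))  # Ada Lovelace
        print(fetch(server.server_address, "customer:99"))  # NOT FOUND
        server.shutdown()
```

Binding to `127.0.0.1` on port 0 keeps the example self-contained; a real deployment would listen on a known port that clients can reach over the network.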

The Dot Com Era – 1990s

Right at the start of the decade, General Magic engineers are already thinking about mobile computers and always-on computing: in a word, smartphones. Later, in 1996, General Magic’s Telescript programming language enables first-generation mobile devices to exchange information with services across a network.

There’s a lot happening now.

- In 1993, Andy Hertzfeld of General Magic uses the term “cloud” to describe remote services and applications.

- General Magic’s Magic Cap is an object-oriented operating system that caters to communications for personal digital assistants (PDAs).

- There’s a boom in personal computers and wired internet connections for both businesses and homes, and the idea of “cloud computing,” along with the cloud symbol, catches on.

- Telecommunications companies offer Virtual Private Network (VPN) services at a relatively low cost.

- In 1997, Emory University Professor Ramnath Chellappa defines the cloud and “grid computing” as a new “computing paradigm, where the boundaries of computing will be determined by economic rationale, rather than technical limits alone”.

- In 1999, VMware reinvents virtual machine technology for x86 systems, providing an ideal framework for cloud compute instances and other infrastructure resources used in early cloud services.

- Also in 1999, Japanese mobile phone company NTT DoCoMo introduces i-mode, a mobile data networking standard. It pioneers mobile web access, email, streaming video, and mobile payments, a full decade before everyone else.

- The same year, Salesforce pioneers the idea of delivering software programs to end users over the internet, heralding the early form of the Software-as-a-Service (SaaS) cloud delivery model.

The late 1990s were a hive of activity that produced the technologies needed to usher in cloud computing’s next frontier, despite the dot-com bubble bursting soon after.

Here’s a quick recap on some major milestones in the history of the cloud, courtesy of The Chartered Institute of IT:

Credit: The history of the cloud between 1960 and 2005 – CIIT

The Cloud Computing Era: 2000s — How The Everything Store Pioneered Cloud Computing With AWS

By 1999, Amazon was expanding beyond selling books online to selling just about everything. But selling books online was nowhere near as challenging as selling products like clothes.

While books are pretty much the same for everybody, clothes come in different colors, sizes, and fits for different shoppers. And as a purely online store, Amazon couldn’t let customers just walk in and try the clothes on.

So, Amazon engineers had to code features for every element of that personalization.

They had to build features for color, size, fit, and whatever other customizable features their vendors offered, as Matt Round, then director of Amazon’s personalization team, explains.

Now take that customizability and multiply it by each of the vast number of items Amazon wanted to sell online, from electronics to personalized cups.

But if Amazon wanted people to prefer shopping online over the flexibility that walking into a physical store provided, it needed to exceed the optionality and flexibility of shopping in person. And they needed to do it fast.

Amazon software engineers needed to build capabilities that would enable customers to see their favorite items first, or items related to other items they’d purchased before or were currently in their shopping carts.

Here’s the problem, though.

The Monolithic Challenge

These were the 1990s, the Wild Wild West of the World Wide Web, as CloudZero’s CTO and cofounder, Erik Peterson, puts it.

Amazon.com was built on a monolithic architecture: a traditional software development approach in which engineers build many features, for many uses, in a single code base. Because everything lives in one code base, changing or scaling any one feature means rebuilding and redeploying the entire application.

A monolith also operates on its own. It does not integrate or communicate with other services, as Michael Skok, Founding Partner at Underscore VC, explains.

This architecture was extremely limiting to Amazon’s rapidly growing business, to put it mildly.

The Microservice Solution

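Amazon had to break up its monolithic architecture into microservices: small, independently deployable services that each handle a single capability and communicate with other services through well-defined interfaces.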

Another challenge was that if you wanted to build a piece of software, you had to start from scratch each time.

Long story short, in 2002, Amazon engineers came up with the idea of a framework where they could share different resources online, including code, so they wouldn’t need to write everything from scratch.

By 2006, they had built a rentable cloud infrastructure that let users pay for computing resources as they used them, instead of buying, installing, and maintaining their own data centers around the clock. Amazon Web Services (AWS) engineers would handle all of that in the background, heralding the Infrastructure-as-a-Service (IaaS) era.

Customers could use:

- Simple Storage Service (Amazon S3) for flexible online storage

- Elastic Compute Cloud (Amazon EC2) for on-demand processing, memory, and networking capacity on a pay-as-you-go pricing model, and later

- Relational Database Service (Amazon RDS) for their structured data

Over the next couple of years, Microsoft Azure, IBM, Oracle Cloud, and Google (originally with Docs and Spreadsheets) join the IaaS boom. Others, like OpenStack, pioneer the Platform-as-a-Service (PaaS) delivery model.

The Era of Microservices and Containers: 2010s

Cloud computing is advancing at a rapid pace:

- In 2010, Microsoft Azure and AWS develop fairly functional private clouds internally. OpenStack blows them out of the water with a do-it-yourself private cloud.

- In 2011, IBM launches SmartCloud while Apple delivers the iCloud. The concept of hybrid clouds (connecting public and private clouds) is bubbling under. NIST provides the reference architecture for cloud computing.

- In 2012, Oracle Cloud delivers all cloud computing models (IaaS, PaaS, and SaaS) from a single cloud service provider. CloudBolt pioneers a hybrid cloud management platform, easing management across private and public clouds. Non-relational database services become available. Cisco pioneers edge computing.

- In 2013, Docker launches, helping developers create software containers that are more lightweight, resource-efficient, and portable than earlier forms such as Solaris Containers (2004). This makes it easier to develop, test, and deploy applications in the cloud.

- In 2014, Google releases Kubernetes (K8s), an open-source container orchestration platform for Docker images with self-healing capabilities, drawing on years of experience running its internal Borg system. K8s goes on to dominate container management in public clouds and elsewhere.

- Also in 2014, AWS introduces Lambda to support serverless applications, and real-time streaming services like Netflix are processing data in the cloud.

- In 2015, machine learning (ML) services and Blockchain-as-a-Service become available in the cloud.

- In 2016, cloud-based Internet of Things (IoT) becomes available and microservices are supporting cloud-native application development.

- In 2017, the OpenFog Consortium publishes a reference architecture for fog computing, a decentralized extension of the cloud.

- In 2018, Google Cloud Platform (GCP) launches Tensor Processing Units (TPUs) in the cloud. Microsoft launches an underwater data center.

Between 2019 and 2022, the Covid-19 pandemic drives rapid adoption of cloud computing as businesses shift to remote work and online video conferencing and use pay-as-you-go pricing to optimize operational costs.

Next-Generation Cloud: 2023 And Beyond

Cloud adoption continues to grow across all industries. Gartner predicts that spending on the public cloud, the most popular cloud deployment model, will increase across the board by more than $100 billion over 2022 figures.

Credit: Public cloud spending growth in 2023 – Gartner

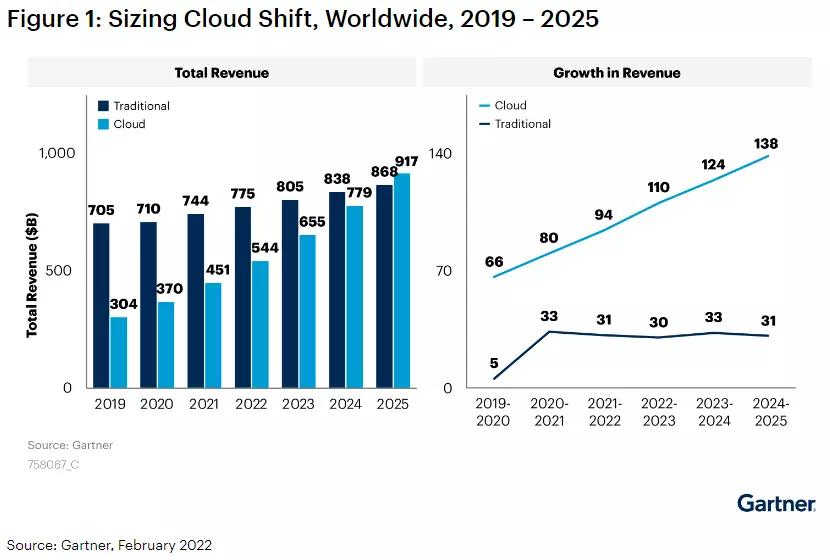

Gartner also forecasts that cloud IT spending will surpass traditional IT spending by 2025.

Credit: Traditional computing vs cloud computing comparison by Gartner

Currently, the following developments are taking place:

- Hybrid clouds and multi-clouds are getting easier to use as more robust cloud management solutions improve visibility, infrastructure monitoring, application performance management, real-time cloud cost anomaly detection and optimization, and more.

- Serverless computing is gradually enabling companies to use cloud resources only when needed, which should help reduce cloud costs.

- Platforms like CloudZero are shifting cloud cost management left, enabling engineers (rather than just finance and FinOps) to see the cost impact of their architectural decisions in near-real-time, so they can build cost-effective solutions.

- 5G, IoT, and other application demands are driving advanced cloud capabilities closer to users and businesses as the cloud becomes increasingly distributed.

- Quantum computing promises to dramatically improve the performance and security of future cloud computing.

Overall, the future of cloud computing is exciting and full of promise. And CloudZero will be there every step of the way.

With CloudZero’s cloud cost intelligence approach, you can understand, control, and optimize your cloud computing costs — using dimensions you actually care about. Think: cost per customer, per project, per team, per environment, per hour, per software feature, per product, etc. — all without endless tagging.

Want to see how? Brands like Drift use CloudZero to save over $2.4 million annually.