Kubernetes is increasingly becoming the standard way to deploy, run, and maintain cloud-native applications that run inside containers. Kubernetes (K8s) automates most container management tasks, empowering engineers to manage high-performing, modern applications at scale.

Meanwhile, several surveys, including those from VMware and Gartner, suggest that inadequate expertise with Kubernetes has held back organizations from fully adopting containerization. So, maybe you’re wondering how Kubernetes components work.

In that case, we’ve put together a bookmarkable guide on pods, nodes, clusters, and more. Let’s dive right in, starting with the very reason Kubernetes exists: containers.

Quick Summary

| | Pod | Node | Cluster |
| --- | --- | --- | --- |
| Description | The smallest deployable unit in a Kubernetes cluster | A physical or virtual machine | A grouping of multiple nodes in a Kubernetes environment |
| Role | Isolates containers from underlying servers to boost portability; provides the resources and instructions for how to run containers optimally | Provides the compute resources (CPU, volumes, etc.) to run containerized apps | Has the control plane to orchestrate containerized apps through nodes and pods |
| What it hosts | Application containers, supporting volumes, and a shared IP address for logically related containers | Pods with application containers inside them, kubelet | Nodes containing the pods that host the application containers, control plane, kube-proxy, etc. |

What Is A Container?

In software engineering, a container is an executable unit of software that packages and runs an entire application, or portions of it, within itself.

Containers comprise not only the application’s binary files, but also libraries, runtimes, configuration files, and any other dependencies that the application requires to run optimally. Talk about self-sufficiency.

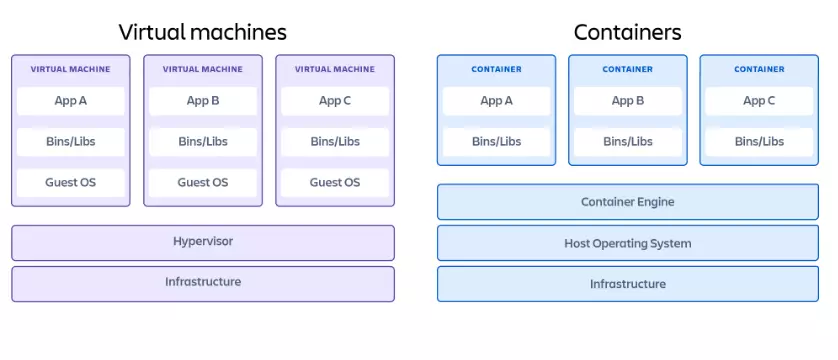

Credit: Containers vs virtual machine architectures

This design enables a container to be an entire application runtime environment unto itself.

As a result, a container isolates the application it hosts from the external environment it runs on. This enables applications running in containers to be built in one environment and deployed in different environments without compatibility problems.

Also, because containers share the host’s operating system kernel instead of each running a full operating system of their own, they are leaner than virtual machines (VMs). This makes deploying containerized applications much quicker and more efficient than deploying them on traditional virtual machines.

What Is A Containerized Application?

In cloud computing, a containerized application refers to an app that has been specially built using cloud-native architecture for running within containers. A container can host either an entire application or small, distributed portions of it (which are known as microservices).

Developing, packaging, and deploying applications in containers is referred to as containerization. Apps that are containerized can run in a variety of environments and devices without causing compatibility problems.

One more thing. Developers can isolate faulty containers and fix them independently before they affect the rest of the application or cause downtime. This is something that is extremely tricky to do with traditional monolithic applications.

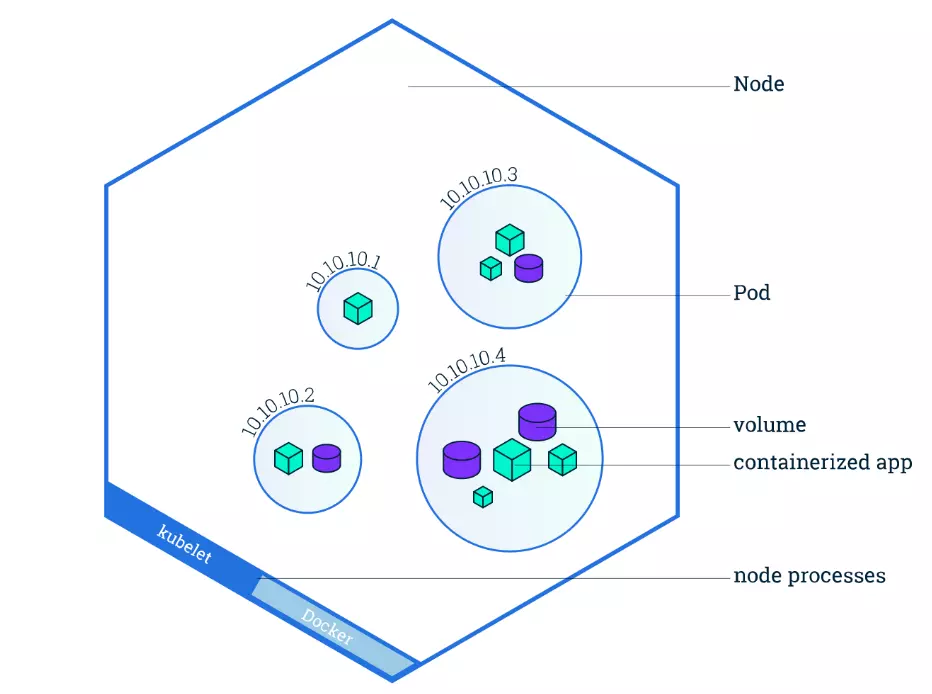

What Is A Kubernetes Pod?

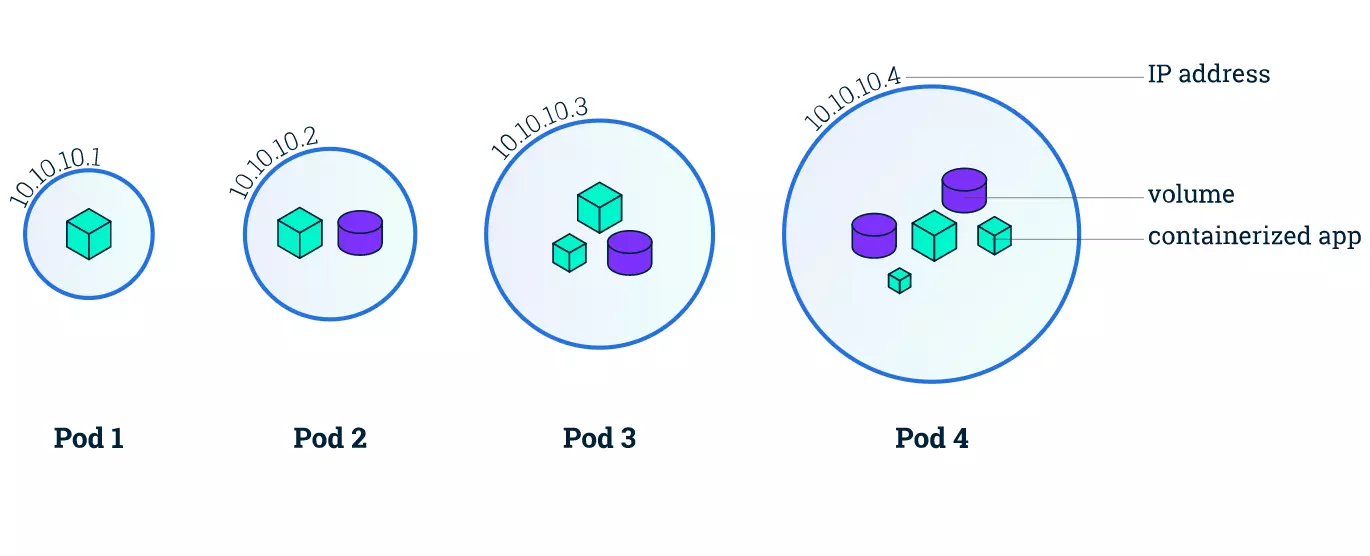

A Kubernetes pod is a collection of one or more application containers.

The pod is an additional level of abstraction that gives its containers shared storage (volumes), a shared IP address, and a way to communicate with each other. It also holds other information about how to run the application containers. Check this out:

Credit: Kubernetes Pods architecture by Kubernetes.io

So, containers do not run directly on the underlying machines. They run inside pods, and Kubernetes starts and stops containers by managing the pods that hold them.

Containers that must communicate directly to function are housed in the same pod. These containers are also co-scheduled because they work within a shared context. Also, the shared storage volumes let a pod’s data survive container restarts, because the volumes outlive the individual containers.
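To make the shared-context idea concrete, here is a minimal sketch of a pod definition with two containers that mount the same volume. It builds the manifest as a plain Python dictionary and prints it as JSON. The pod name, container names, images, and mount path are illustrative assumptions, not values from this guide.

```python
import json


def build_pod_manifest(name: str) -> dict:
    """Return a Pod manifest (plain dict) with two containers sharing one volume."""
    # Hypothetical mount shared by both containers.
    shared_mount = {"name": "shared-data", "mountPath": "/data"}
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": {"app": name}},
        "spec": {
            # An emptyDir volume lives as long as the pod, so its contents
            # survive restarts of the individual containers.
            "volumes": [{"name": "shared-data", "emptyDir": {}}],
            "containers": [
                {
                    "name": "web",  # illustrative container name
                    "image": "nginx:1.25",  # illustrative image
                    "ports": [{"containerPort": 80}],
                    "volumeMounts": [shared_mount],
                },
                {
                    "name": "content-writer",  # illustrative helper container
                    "image": "busybox:1.36",
                    "command": [
                        "sh",
                        "-c",
                        "while true; do date > /data/index.html; sleep 5; done",
                    ],
                    "volumeMounts": [shared_mount],
                },
            ],
        },
    }


manifest = build_pod_manifest("web-with-helper")
print(json.dumps(manifest, indent=2))
```

Because Kubernetes accepts manifests in JSON as well as YAML, the printed output could be saved to a file and applied to a test cluster. Both containers would be scheduled together on the same node and see the same `/data` directory.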

Kubernetes also scales the number of pod replicas up and down to meet changing load, traffic, and performance requirements. Replicas of the same pod scale together.
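As a rough illustration of how a replica count can follow load, the sketch below uses the proportional rule documented for the Kubernetes Horizontal Pod Autoscaler: desired replicas = ceil(current replicas × current metric / target metric). The autoscaler is not named in this guide, so treat it as one possible mechanism. The function name and the min/max bounds are assumptions for illustration, and the real autoscaler adds tolerances and stabilization windows that are omitted here.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,   # assumed lower bound
    max_replicas: int = 10,  # assumed upper bound
) -> int:
    """Scale the replica count in proportion to how far the metric is from target."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp the result to the configured bounds.
    return max(min_replicas, min(max_replicas, raw))


# 4 replicas averaging 90% CPU against a 60% target: scale up.
print(desired_replicas(4, 90, 60))   # 6
# Same replicas at 30% CPU: scale down.
print(desired_replicas(4, 30, 60))   # 2
# A large spike is capped at max_replicas.
print(desired_replicas(4, 300, 60))  # 10
```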

Another distinctive feature of Kubernetes is that rather than creating containers directly, it creates pods that wrap the containers.

Also, whenever you create a K8s pod, the platform automatically schedules it to run on a Node. This pod will remain active until the specific process completes, resources to support the pod run out, the pod object is removed, or the host node terminates or fails.

Each pod runs inside a Kubernetes node. If a pod or its node fails, a logically similar pod running on a different node can take over. Which brings us to Kubernetes nodes.

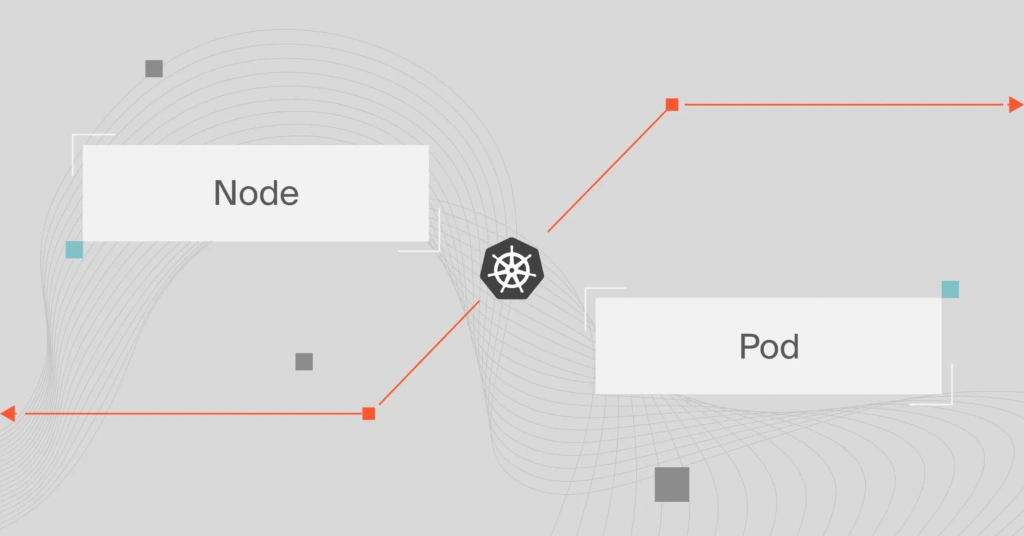

What Is A Kubernetes Node?

A Kubernetes node is either a virtual or physical machine that one or more Kubernetes pods run on. It is a worker machine that contains the necessary services to run pods, including the CPU and memory resources they need to run.

Now, picture this:

Credit: How Kubernetes Nodes work by Kubernetes.io

Each node also comprises three crucial components:

- Kubelet – This is an agent that runs inside each node to ensure pods are running properly. It also handles communication between the Master and the node.

- Container runtime – This is the software that runs containers. It manages individual containers, including retrieving container images from repositories or registries, unpacking them, and running the application.

- Kube-proxy – This is a network proxy that runs inside each node, managing the networking rules within the node (between its pods) and across the entire Kubernetes cluster.

Here’s what a Cluster is in Kubernetes.

What Is A Kubernetes Cluster?

Nodes usually work together in groups. A Kubernetes cluster contains a set of worker machines (nodes). The cluster automatically distributes workload among its nodes, enabling seamless scaling.

Here’s that symbiotic relationship again.

A cluster consists of several nodes. The node provides the compute power to run the setup. It can be a virtual machine or a physical machine. A single node can run one or more pods.

Each pod contains one or more containers. A container hosts the application code and all the dependencies the app requires to run properly.
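The cluster, node, pod, and container relationship can be sketched as a small nested data model. This is only a conceptual illustration; the class names, field names, and sample values are assumptions and do not mirror the Kubernetes API objects.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Container:
    name: str
    image: str


@dataclass
class Pod:
    name: str
    containers: List[Container] = field(default_factory=list)


@dataclass
class Node:
    name: str
    pods: List[Pod] = field(default_factory=list)


@dataclass
class Cluster:
    name: str
    nodes: List[Node] = field(default_factory=list)

    def container_count(self) -> int:
        """Count every container across all pods on all nodes."""
        return sum(len(pod.containers) for node in self.nodes for pod in node.pods)


# Hypothetical cluster: two nodes, three pods, four containers.
cluster = Cluster(
    name="demo",
    nodes=[
        Node("node-a", pods=[
            Pod("web", [Container("nginx", "nginx:1.25"),
                        Container("log-shipper", "busybox:1.36")]),
            Pod("api-1", [Container("api", "example/api:1.0")]),
        ]),
        Node("node-b", pods=[
            Pod("api-2", [Container("api", "example/api:1.0")]),
        ]),
    ],
)

for node in cluster.nodes:
    for pod in node.pods:
        names = ", ".join(c.name for c in pod.containers)
        print(f"{cluster.name} > {node.name} > {pod.name} > [{names}]")

print(cluster.container_count())  # 4
```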

Something else. The cluster also comprises the Kubernetes Control Plane (or Master), which manages each node within it. The control plane is a container orchestration layer where K8s exposes the API and interfaces for defining, deploying, and managing containers’ lifecycles.

The master assesses each node and distributes workloads according to the capacity available on each node. This workload distribution is automatic, keeps performance efficient, and is one of the most popular features of Kubernetes as a container management platform.
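Here is a toy sketch of that placement decision. It filters out nodes that cannot fit a pod’s CPU request, then picks the node with the most free CPU. The real Kubernetes scheduler also works in filtering and scoring stages but weighs many more factors, so this is a simplified stand-in, and every name and number in it is hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class ToyNode:
    name: str
    cpu_millicores: int            # CPU the node can give to pods
    used_millicores: int = 0
    pods: List[str] = field(default_factory=list)

    @property
    def free(self) -> int:
        return self.cpu_millicores - self.used_millicores


def schedule(pod_name: str, cpu_request: int, nodes: List[ToyNode]) -> Optional[str]:
    """Place a pod on the feasible node with the most free CPU, or return None."""
    # Filter: keep only nodes with enough free CPU for the request.
    feasible = [n for n in nodes if n.free >= cpu_request]
    if not feasible:
        return None  # the pod would stay pending
    # Score: prefer the node with the most free CPU.
    best = max(feasible, key=lambda n: n.free)
    best.used_millicores += cpu_request
    best.pods.append(pod_name)
    return best.name


nodes = [ToyNode("node-a", 2000), ToyNode("node-b", 1000)]
requests = [("api-1", 1200), ("web-1", 500), ("web-2", 500), ("batch-1", 1500)]

placements: Dict[str, Optional[str]] = {
    pod: schedule(pod, cpu, nodes) for pod, cpu in requests
}
print(placements)
# {'api-1': 'node-a', 'web-1': 'node-b', 'web-2': 'node-a', 'batch-1': None}
```

The last pod is left unplaced because no node has 1,500 millicores free. In a real cluster, that pod would wait until capacity became available.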

You can also run the Kubernetes cluster on different providers’ platforms, such as Amazon’s Elastic Kubernetes Service (EKS), Microsoft’s Azure Kubernetes Service (AKS), or the Google Kubernetes Engine (GKE).

Take The Next Step: View, Track, And Control Your Kubernetes Costs With Confidence

Open-source, highly scalable, and self-healing, Kubernetes is a powerful platform for managing containerized applications. But as Kubernetes components scale to support business growth, Kubernetes cost management tends to get overlooked.

Most cost tools only display your total cloud costs, not how Kubernetes containers contributed. With CloudZero, you can view Kubernetes costs down to the hour as well as by K8s concepts, such as cost per pod, container, microservice, namespace, and cluster.

By drilling down to this level of granularity, you are able to find out what people, products, and processes are driving your Kubernetes spending.

You can also combine your containerized and non-containerized costs to simplify your analysis. CloudZero enables you to understand your Kubernetes costs alongside your AWS, Azure, Google Cloud, Snowflake, Databricks, MongoDB, and New Relic spend, so you get the full picture.

You can then decide what to do next to optimize the cost of your containerized applications without compromising performance. CloudZero will even alert you when cost anomalies occur, before you overspend.

Schedule a demo today to see these CloudZero Kubernetes Cost Analysis capabilities and more!

Kubernetes FAQ

Is a Kubernetes Pod a Container?

Not exactly. A Kubernetes pod is a group of one or more containers that share storage and networking resources, so a pod wraps containers rather than being one itself. Pods are the smallest deployable units in Kubernetes and manage containers collectively, allowing them to run in a shared context with shared namespaces.

What is the difference between a container, a node, and a pod?

A node is a worker machine in Kubernetes, part of a cluster, that runs containers and other Kubernetes components. A pod, on the other hand, is a higher-level abstraction that encapsulates one or more containers and their shared resources, managed collectively within a node.

Can a pod have multiple containers?

Yes, a Kubernetes pod can have multiple containers. Pods are designed to encapsulate closely coupled containers that need to share resources and communicate with each other over localhost. This approach facilitates running multiple containers within the same pod while treating them as a cohesive unit for scheduling, scaling, and management within the Kubernetes cluster.

How many pods run on a node?

The number of Kubernetes pods that can run on a node depends on various factors such as the node’s resources (CPU, memory, etc.), the resource requests and limits set by the pods, and any other applications or system processes running on the node.

Generally, a node can run multiple pods, and the Kubernetes scheduler determines pod placement based on available resources and scheduling policies defined in the cluster configuration.
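As a back-of-the-envelope sketch, the calculation below estimates how many identical pods fit on one node from its allocatable CPU and memory and each pod’s resource requests. The function and parameter names are hypothetical. The `max_pods` cap of 110 reflects the default per-node limit in upstream Kubernetes as an assumption; managed services and custom kubelet settings may use a different value.

```python
def max_pods_that_fit(
    allocatable_cpu_m: int,      # node CPU available to pods, in millicores
    allocatable_mem_mib: int,    # node memory available to pods, in MiB
    pod_cpu_request_m: int,      # CPU request per pod, in millicores
    pod_mem_request_mib: int,    # memory request per pod, in MiB
    max_pods: int = 110,         # assumed per-node pod cap
) -> int:
    """Estimate how many identical pods one node can hold."""
    if pod_cpu_request_m <= 0 or pod_mem_request_mib <= 0:
        raise ValueError("pod requests must be positive")
    by_cpu = allocatable_cpu_m // pod_cpu_request_m
    by_mem = allocatable_mem_mib // pod_mem_request_mib
    # The tightest constraint wins.
    return min(by_cpu, by_mem, max_pods)


# 4 vCPU / 16 GiB node, pods requesting 250m CPU and 512 MiB: CPU is the limit.
print(max_pods_that_fit(4000, 16384, 250, 512))  # 16
# Very small pods hit the per-node pod cap instead.
print(max_pods_that_fit(4000, 16384, 10, 16))    # 110
```

This ignores system daemons, per-pod overhead, and mixed pod sizes, so treat the result as an upper bound rather than a guarantee.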