Data engineering. Data science. Machine learning. Data governance. These are just a few of the many capabilities that make Databricks a favorite among developers and data engineers.

Yet most users share one common complaint: cost. Some say the platform is too expensive, especially for small and medium-sized organizations. Others find it hard to justify within tight budgets. On top of that, running and maintaining data warehouses is costly in its own right.

Still, Databricks is one of the most robust data lakehouses available today.

So, in this article, we share several proven Databricks cost optimization tips you can implement to reduce spending.

What Is Databricks Used For?

Databricks is a data lakehouse that merges the strengths of data lakes and data warehouses into a single platform.

Data warehouses are ideal for structured data, but most businesses collect all kinds of data that warehouses can’t handle. Data lakes, on the other hand, store all types of data, which often end up being messy.

Databricks addresses this by organizing your data, regardless of its type, and ensuring it is ready for analysis, machine learning, or business decisions.

Yet, with all its power, Databricks isn’t without cost hurdles.

Databricks Pricing And Billing Challenges

The Databricks pricing model can be complex and frustrating for many users. Here are some common challenges:

Data complexity

Complex data and algorithms consume more Databricks Units (DBUs), the normalized unit of processing capacity that Databricks uses for measurement and billing. Running advanced workloads such as machine learning, or processing large datasets, therefore increases your compute costs. The more complex the task, the more resources it uses, and the faster bills escalate.
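To make the relationship concrete, the DBU portion of a bill is roughly DBUs consumed per hour, times hours run, times the price per DBU. The sketch below assumes a single hypothetical price per DBU and hypothetical DBU rates for two workloads; real rates vary by cloud, region, tier, and compute type.

```python
# Illustrative rates only: real DBU prices depend on your cloud, region,
# pricing tier, and compute type.
PRICE_PER_DBU = 0.15  # hypothetical USD per DBU


def estimate_dbu_cost(dbu_per_hour: float, hours: float,
                      price_per_dbu: float = PRICE_PER_DBU) -> float:
    """Estimate the Databricks (DBU) portion of a workload's bill.

    For classic compute, the underlying cloud VMs are typically billed
    separately by your cloud provider and are not included here.
    """
    return dbu_per_hour * hours * price_per_dbu


# A light reporting job vs. a heavier ML training job (hypothetical DBU rates).
reporting = estimate_dbu_cost(dbu_per_hour=2.0, hours=1.0)
training = estimate_dbu_cost(dbu_per_hour=12.0, hours=4.0)
print(f"reporting: ${reporting:.2f}, training: ${training:.2f}")
```

Because DBU rate and runtime multiply, a workload that is both heavier and longer-running grows its cost on two fronts at once.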

Usage reporting limitations

DBUs measure the resources consumed by workloads. However, the built-in usage reports only show total costs per workspace. This makes it difficult for teams to figure out what’s driving costs or how to optimize their spending.

To address this, Databricks now offers billing system tables, which give detailed insights into DBU usage by job. There is a catch, though: you need Unity Catalog enabled to access them.
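Once you can read the billing system tables, attributing DBUs to jobs is a simple aggregation. The minimal sketch below assumes the rows have already been fetched from `system.billing.usage`; the simplified row shape (`usage_quantity` plus a `usage_metadata.job_id` field) is an assumption to check against the schema in your workspace, and the job names and values are made up.

```python
from collections import defaultdict

# Sample rows shaped loosely like system.billing.usage records
# (simplified, assumed fields; job names and quantities are illustrative).
usage_rows = [
    {"usage_metadata": {"job_id": "etl_nightly"}, "usage_quantity": 40.0},
    {"usage_metadata": {"job_id": "ml_training"}, "usage_quantity": 120.0},
    {"usage_metadata": {"job_id": "etl_nightly"}, "usage_quantity": 35.0},
    {"usage_metadata": {"job_id": None}, "usage_quantity": 10.0},  # e.g. interactive use
]


def dbus_by_job(rows):
    """Sum DBU usage per job, grouping usage without a job under 'no_job'."""
    totals = defaultdict(float)
    for row in rows:
        job_id = row["usage_metadata"].get("job_id") or "no_job"
        totals[job_id] += row["usage_quantity"]
    # Largest consumers first, so the biggest cost drivers surface at the top.
    return dict(sorted(totals.items(), key=lambda kv: kv[1], reverse=True))


print(dbus_by_job(usage_rows))
```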

High data storage costs

Data storage in Databricks often relies on cloud storage services such as Amazon S3 or Azure Blob Storage, which charge based on the amount of data stored and accessed. As datasets grow larger, costs increase. Frequent read-and-write operations, common in analytics and machine learning workflows, add even more to the bill.
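A rough model of object-storage cost has two parts: capacity (GB stored per month) and access (request charges). The sketch below uses hypothetical rates, not a quote from any provider's price list, to show how growth in both data volume and read traffic compounds the monthly bill.

```python
def monthly_storage_cost(gb_stored: float, price_per_gb_month: float,
                         requests: int, price_per_1k_requests: float) -> float:
    """Estimate monthly object-storage cost: capacity plus request charges."""
    capacity = gb_stored * price_per_gb_month
    access = requests / 1000 * price_per_1k_requests
    return capacity + access


# Hypothetical rates; check your provider's price list for real numbers.
RATE_GB, RATE_1K = 0.023, 0.0004

current = monthly_storage_cost(10_000, RATE_GB, 5_000_000, RATE_1K)
# Same data doubled, with four times the reads from heavier analytics/ML use.
projected = monthly_storage_cost(20_000, RATE_GB, 20_000_000, RATE_1K)
print(f"current: ${current:,.2f}/month, projected: ${projected:,.2f}/month")
```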

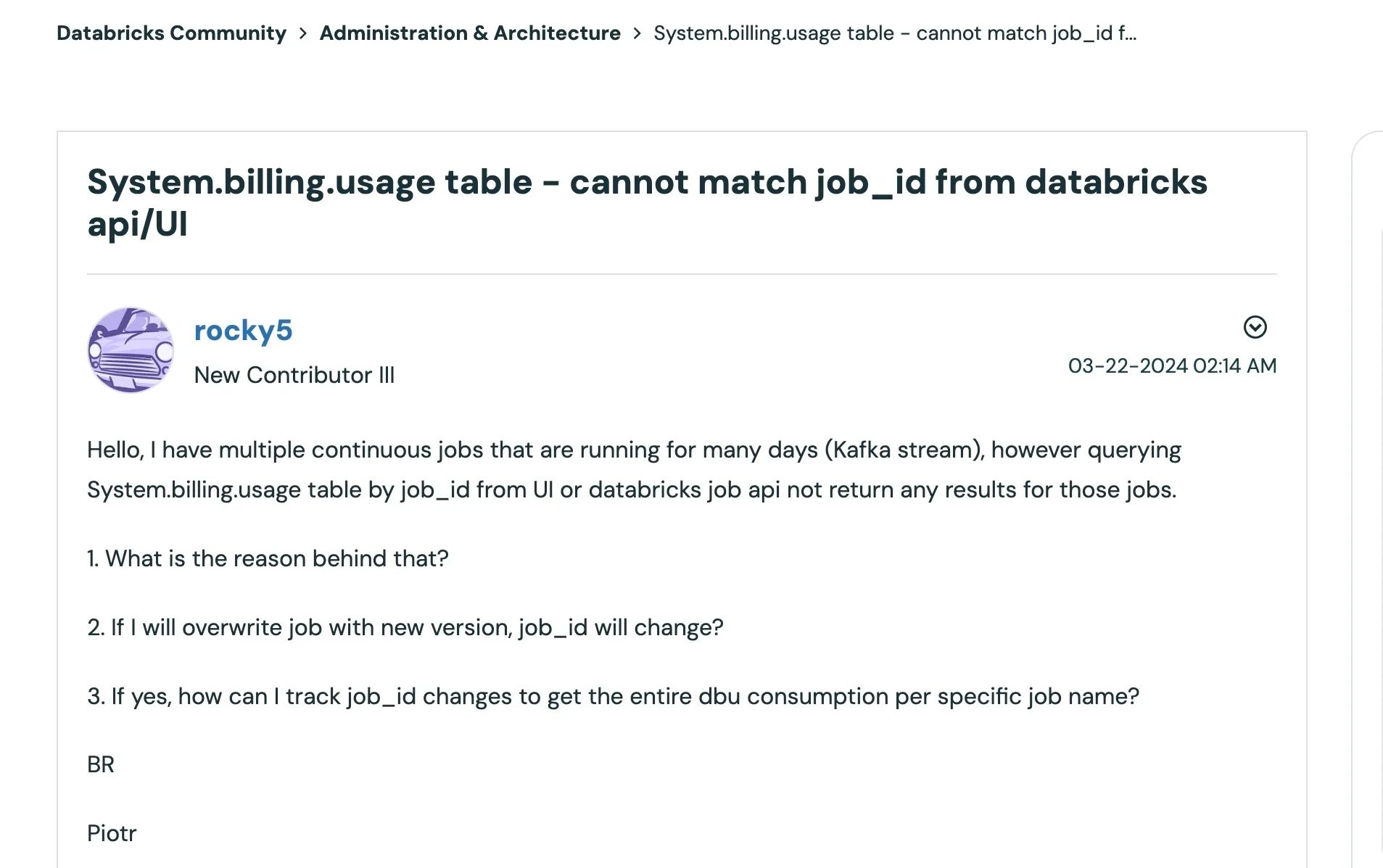

Difficulty in tracking long-running jobs

Continuous jobs, such as real-time data streams, can be difficult to monitor. Some users find that these tasks don’t appear correctly in Databricks’ usage tables, making it challenging to manage costs.
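One workaround is to estimate what each active run has consumed so far instead of waiting for it to show up in usage reports. The sketch below assumes you supply an estimated DBU-per-hour rate for each run (a hypothetical input, not a field Databricks provides in this form) and flags runs whose accrued usage exceeds a budget.

```python
from datetime import datetime, timezone


def over_budget_runs(runs, now, dbu_budget):
    """Flag runs whose estimated DBU consumption so far exceeds dbu_budget.

    Runs with no end time are treated as still active and accrue until `now`,
    so continuous streaming jobs are checked without waiting for them to finish.
    """
    flagged = []
    for run in runs:
        end = run["end"] or now
        hours = (end - run["start"]).total_seconds() / 3600
        accrued = hours * run["dbu_per_hour"]  # assumed, estimated rate
        if accrued > dbu_budget:
            flagged.append((run["name"], round(accrued, 1)))
    return flagged


utc = timezone.utc
runs = [
    {"name": "stream_ingest", "start": datetime(2024, 1, 1, 0, tzinfo=utc),
     "end": None, "dbu_per_hour": 4.0},
    {"name": "daily_report", "start": datetime(2024, 1, 2, 11, tzinfo=utc),
     "end": datetime(2024, 1, 2, 11, 30, tzinfo=utc), "dbu_per_hour": 2.0},
]
now = datetime(2024, 1, 2, 12, tzinfo=utc)
print(over_budget_runs(runs, now, dbu_budget=100))
```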

These challenges can strain your budget, but there are effective ways to manage Databricks costs.

8 Databricks Cost Optimization Strategies

These Databricks cost optimization strategies will help you make the most of the platform without draining resources.

1. Monitor usage with built-in and external tools

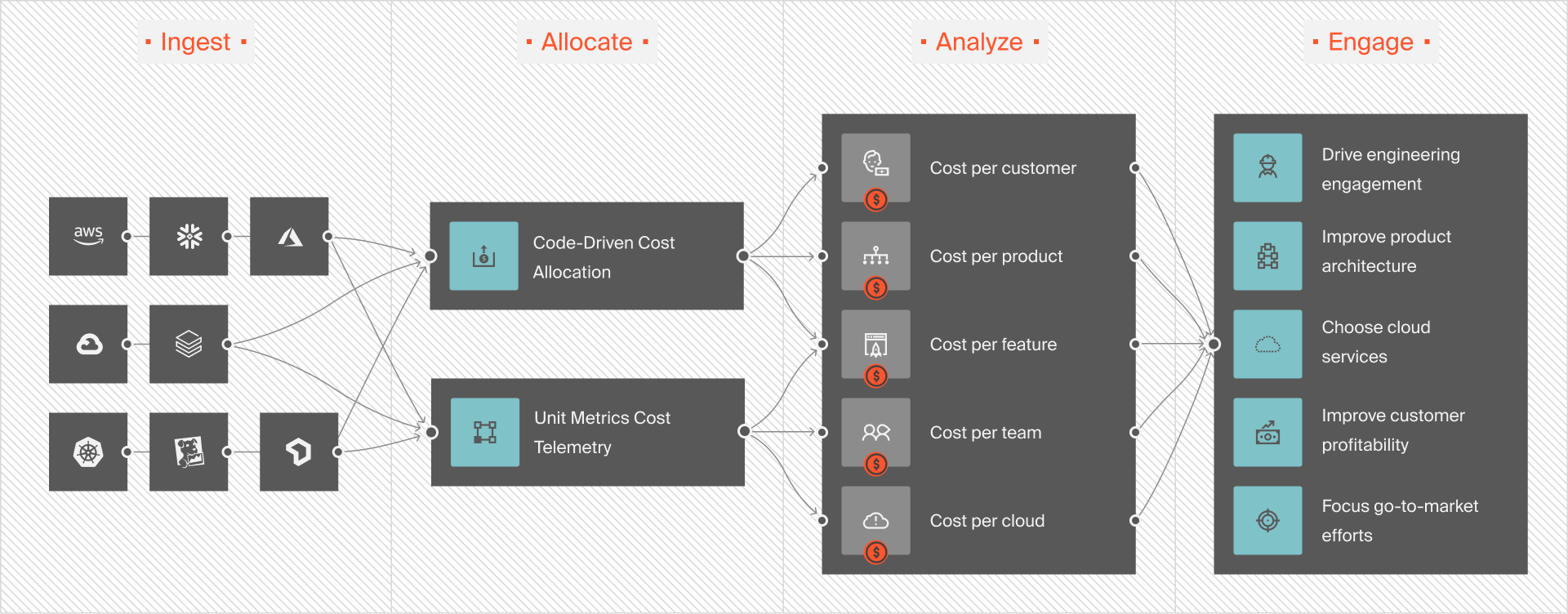

Use system tables to track Databricks Unit consumption and resource usage. For deeper insights, connect a tool such as CloudZero to break down costs by team, project, and more.

Frequent monitoring can also reveal idle clusters or jobs using too much compute power.
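As an example of what such monitoring can check, the sketch below flags clusters whose CPU utilization never rose above a threshold during a sampling window. The input format (cluster name mapped to recent CPU percentages) and the 5% threshold are assumptions; in practice you would populate the samples from your own monitoring data.

```python
def idle_clusters(samples, cpu_threshold=5.0):
    """Return clusters whose CPU (%) stayed below cpu_threshold for every sample.

    Clusters with no samples are skipped rather than assumed idle.
    """
    return sorted(
        cluster for cluster, cpu in samples.items()
        if cpu and max(cpu) < cpu_threshold
    )


# Hypothetical CPU utilization samples (percent) over the last hour.
samples = {
    "analytics-shared": [1.2, 0.8, 2.5, 1.0],
    "etl-prod": [65.0, 80.2, 71.4, 58.9],
    "ds-sandbox": [0.0, 0.0, 0.3, 0.1],
}
print(idle_clusters(samples))  # ['analytics-shared', 'ds-sandbox']
```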

2. Optimize data storage

Cleaning up unused or obsolete data regularly is a simple way to control costs. Compressing data and using efficient storage formats, such as Delta Lake, further reduces storage costs while maintaining performance.

Implement data retention policies to delete outdated datasets automatically. This minimizes costs and helps ensure compliance with data governance standards.
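The core of a retention policy is a cutoff date: anything last modified before it becomes a deletion candidate. The minimal sketch below assumes you already have each dataset's last-modified date (the dataset names and dates are hypothetical) and returns the candidates for review before anything is actually deleted.

```python
from datetime import date, timedelta


def expired_datasets(datasets, today, retention_days):
    """Return datasets last modified before the retention cutoff."""
    cutoff = today - timedelta(days=retention_days)
    return [name for name, last_modified in datasets.items() if last_modified < cutoff]


# Hypothetical inventory: dataset name -> last-modified date.
datasets = {
    "raw_clickstream_2022": date(2022, 12, 31),
    "sales_daily": date(2024, 5, 30),
    "tmp_experiment_17": date(2023, 3, 15),
}
print(expired_datasets(datasets, today=date(2024, 6, 1), retention_days=365))
```

On Delta tables, keep in mind that deleting data from a table does not by itself remove the old data files; the Delta `VACUUM` command cleans up files no longer referenced by the table beyond its retention threshold.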

3. Rightsize clusters for efficiency

Oversized clusters waste resources and drive up costs, while undersized clusters can cause jobs to run inefficiently and take longer to complete. Databricks offers an auto-scaling feature that dynamically adjusts the number of workers based on demand, helping to strike a balance between cost and performance.

Review your workload patterns and resource consumption regularly. Smaller jobs, such as reporting or simple ETL processes, may not need high-powered clusters. Analyze job metrics and rightsize clusters to avoid overprovisioning resources, which can lead to significant cost savings over time.
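A simple starting heuristic for rightsizing is to scale the worker count so average utilization lands near a target. The sketch below assumes work scales roughly linearly with workers, which is often not true for skewed or shuffle-heavy jobs, so treat its output as a starting point for testing rather than a final answer. The 70% target is a hypothetical choice.

```python
import math


def recommend_workers(current_workers: int, avg_cpu_util: float,
                      target_util: float = 0.7, min_workers: int = 1) -> int:
    """Suggest a worker count that would bring average CPU near target_util.

    Assumes load spreads roughly linearly across workers (a simplification).
    """
    needed = current_workers * avg_cpu_util / target_util
    # Small tolerance absorbs floating-point noise (e.g. 6.000000000000001).
    return max(min_workers, math.ceil(needed - 1e-9))


print(recommend_workers(20, 0.21))  # oversized: suggests 6
print(recommend_workers(10, 0.95))  # undersized: suggests 14
```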

4. Enable cluster auto-termination

Idle clusters keep accruing compute charges even when no work is running. Auto-termination shuts down a cluster automatically after a set period of inactivity.
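Both auto-scaling and auto-termination are set in the cluster definition. The sketch below builds a request body using field names from the Databricks Clusters API (`autoscale` with `min_workers`/`max_workers`, and `autotermination_minutes`); the runtime and node type values are placeholders you would replace, and the worker bounds and 30-minute timeout are hypothetical choices.

```python
import json


def cluster_spec(name: str, min_workers: int, max_workers: int, idle_minutes: int) -> dict:
    """Build a cluster definition with autoscaling and auto-termination."""
    if not 0 < min_workers <= max_workers:
        raise ValueError("need 0 < min_workers <= max_workers")
    if idle_minutes <= 0:
        # A value of 0 would disable auto-termination, which defeats the purpose.
        raise ValueError("idle_minutes must be positive")
    return {
        "cluster_name": name,
        "spark_version": "<runtime-version>",  # placeholder
        "node_type_id": "<node-type>",          # placeholder
        "autoscale": {"min_workers": min_workers, "max_workers": max_workers},
        "autotermination_minutes": idle_minutes,
    }


print(json.dumps(cluster_spec("reporting", 1, 4, 30), indent=2))
```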

5. Schedule jobs during off-peak hours

Depending on your cloud provider and pricing model, compute can be cheaper during off-peak times such as nights and weekends; spot instance prices, for example, fluctuate with demand. Scheduling resource-intensive jobs during these periods can lead to substantial savings. This is particularly effective for predictable workloads such as nightly ETL pipelines or scheduled analytics jobs.
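The sketch below encodes an assumed off-peak window (weekday nights from 22:00 to 06:00, plus weekends) as a check you could apply before launching ad hoc heavy work. It also shows a job schedule using the Databricks Jobs API fields `quartz_cron_expression` and `timezone_id`; the 02:00 UTC time is a hypothetical choice.

```python
from datetime import datetime


def is_off_peak(ts: datetime, start_hour: int = 22, end_hour: int = 6) -> bool:
    """True if ts falls on a weekend or in the assumed nightly window."""
    if ts.weekday() >= 5:  # 5 = Saturday, 6 = Sunday
        return True
    return ts.hour >= start_hour or ts.hour < end_hour


# Jobs API schedule running a nightly ETL at 02:00 UTC (Quartz cron syntax:
# seconds, minutes, hours, day-of-month, month, day-of-week).
nightly_schedule = {"quartz_cron_expression": "0 0 2 * * ?", "timezone_id": "UTC"}

print(is_off_peak(datetime(2024, 6, 3, 2)))   # Monday 02:00 -> True
print(is_off_peak(datetime(2024, 6, 3, 14)))  # Monday 14:00 -> False
```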

6. Update Databricks runtimes

Updating Databricks runtimes helps you save costs by using the latest performance improvements. Newer versions often include better memory management, faster processing, and resource-efficient algorithms.
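A first step is simply finding clusters on older runtimes. The sketch below parses the leading major.minor number from runtime version strings in the `13.3.x-scala2.12` style and flags anything below a minimum; the cluster names, versions, and the `13.3` floor are hypothetical examples, not a recommendation.

```python
import re


def parse_runtime(spark_version: str) -> tuple:
    """Extract (major, minor) from a version string like '13.3.x-scala2.12'."""
    m = re.match(r"(\d+)\.(\d+)", spark_version)
    if not m:
        raise ValueError(f"unrecognized runtime version: {spark_version}")
    return int(m.group(1)), int(m.group(2))


def outdated_clusters(clusters: dict, minimum: str = "13.3") -> list:
    """Return names of clusters whose runtime is older than `minimum`."""
    floor = tuple(int(part) for part in minimum.split("."))
    return sorted(name for name, version in clusters.items()
                  if parse_runtime(version) < floor)


clusters = {
    "etl-prod": "15.4.x-scala2.12",
    "legacy-reports": "10.4.x-scala2.12",
    "ml-train": "12.2.x-cpu-ml-scala2.12",
}
print(outdated_clusters(clusters))  # ['legacy-reports', 'ml-train']
```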

7. Optimize queries for reduced compute

Poorly optimized queries can consume excessive resources, driving up costs. Efficient query design reduces compute time and improves performance. Partitioning, caching, and indexing help cut resource consumption and speed up query execution.
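To see why partitioning cuts compute, consider a toy, in-memory analogy of partition pruning: when a table is split by date and the query filters on date, the engine only needs to read the matching partition. This is plain Python for illustration, not Spark or Databricks API code, and the data is synthetic.

```python
from collections import defaultdict

# Synthetic table: 3 days x 1,000 rows.
rows = [{"day": f"2024-06-0{d}", "amount": i} for d in (1, 2, 3) for i in range(1000)]

# "Partition" the table by day, as a date-partitioned table would be laid out.
partitions = defaultdict(list)
for row in rows:
    partitions[row["day"]].append(row)


def full_scan(rows, day):
    """Read every row, then filter: cost grows with the whole table."""
    return [r for r in rows if r["day"] == day], len(rows)


def pruned_scan(partitions, day):
    """Read only the matching partition: cost grows with that partition."""
    part = partitions.get(day, [])
    return part, len(part)


full_rows, full_read = full_scan(rows, "2024-06-02")
pruned_rows, pruned_read = pruned_scan(partitions, "2024-06-02")
print(f"rows read: full={full_read}, pruned={pruned_read}")  # full=3000, pruned=1000
```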

8. Train teams on cost management

A well-informed team is key to effective cost management. Ensure your team understands Databricks’ pricing model, including how DBUs are consumed. Training should cover best practices for cluster usage, query optimization, and job scheduling to maximize efficiency.

Encourage team members to track their usage and share cost-saving tips. Building a culture of awareness and collaboration ensures everyone works toward reducing costs while staying productive.

If Databricks remains costly even after applying these strategies, it may be worth evaluating Databricks alternatives.

What Next?

Learn How To Optimize Your Databricks Costs With CloudZero

CloudZero integrates with Databricks by pulling data from system tables such as system.billing and system.compute. This integration enables businesses to track costs at a detailed, granular level, such as by job, team, or project.

Beyond Databricks, CloudZero integrates across an organization's entire cloud infrastructure. This unified view compares costs across platforms (AWS, Azure, GCP), giving a complete picture of total cloud spend.

In addition, CloudZero continuously monitors Databricks usage for unusual cost spikes. When it detects one, the platform triggers an alert so teams can take corrective action before costs escalate further. This proactive monitoring helps ensure that users don't miss unexpected billing increases, whether they come from poorly utilized clusters, idle resources, or inefficient queries.
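For intuition about how spike detection can work in general, here is one common, simple technique: flag a day whose cost exceeds the trailing mean by more than k standard deviations. This is a generic sketch, not CloudZero's detection method; the 7-day window, k = 3, and the cost series are hypothetical.

```python
from statistics import mean, stdev


def cost_spikes(daily_costs, window=7, k=3.0):
    """Return indices of days whose cost exceeds mean + k * stdev of the prior window."""
    spikes = []
    for i in range(window, len(daily_costs)):
        history = daily_costs[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if daily_costs[i] > mu + k * sigma:
            spikes.append(i)
    return spikes


# Hypothetical daily Databricks costs (USD) with one abnormal day.
costs = [100, 102, 98, 101, 99, 100, 103, 100, 250, 101]
print(cost_spikes(costs))  # [8]
```

A trailing window adapts to gradual growth, while a sudden jump, such as a cluster left running at full size, stands out against recent history.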

FAQs

Why is Databricks so expensive?

Databricks charges based on the compute power your workload consumes. Big jobs like machine learning or large-scale data processing require more compute, resulting in higher bills. Poor resource management, such as oversized clusters or unused resources, also increases costs.

Does Databricks offer tools for cost management?

Yes, Databricks provides built-in tools, such as system tables, to monitor DBU consumption and resource usage. Third-party platforms, like CloudZero, integrate with Databricks to offer detailed cost breakdowns by team, project, or workload.

What is a serverless SQL warehouse in Databricks?

A serverless SQL warehouse is a scalable compute option for SQL workloads in Databricks. It automatically adjusts resources based on query demand and charges only for active usage, making it ideal for cost-efficient business intelligence tasks.