For developers and programmers, Amazon Web Services (AWS) offers many benefits. It gives you access to the computing and DevOps tools you need at the press of a button — which helps you get products out the door fast.

However, it can be challenging to control your costs and identify waste. In this comprehensive guide, we will examine some practical steps you can take for AWS cost optimization.

In the first chapter, we’ll look at how to ensure that the right configurations are in place and the right data is available when the team is ready to start focusing on costs. Next, we will explore ways to avoid common architectural waste. Finally, we’ll focus on creating the ideal scenario going forward: how you can control AWS costs proactively before excess costs accumulate.


Chapter 1: How To Set Yourself up for Success With AWS

The first step to success with AWS — and controlling AWS costs — is to get the right configurations in place. In the sections below, we’ll dig into where you should focus for AWS cost optimization, and some important setup steps like tagging.

AWS Cost Optimization: Setting up for Success

There are a number of steps you can take on the front end to make it easier to track and manage your AWS costs. The following sections outline the steps and AWS tools you can use for AWS cost optimization.

Implement AWS Organizations and Consolidated Billing

To start off, if you’re not using AWS Organizations and consolidated billing, consider implementing these tools. AWS Organizations enables teams to automate account creation, create groups of accounts to reflect business needs, and apply governance policies for these groups. The consolidated billing feature within AWS Organizations lets teams consolidate payments for multiple AWS accounts. This will help you stay organized and consistent.

Create Separate AWS Accounts for Production and Development

Second, consider creating different AWS accounts for production and development. AWS recommends going even further by creating separate accounts for each environment, segmented by feature or product. While that practice can simplify understanding of cloud spend, it can be challenging to manage at scale. As a general rule, separate production workloads from development at a minimum. Based on organizational requirements, teams may decide to segment further.

Create a Company Tagging Policy

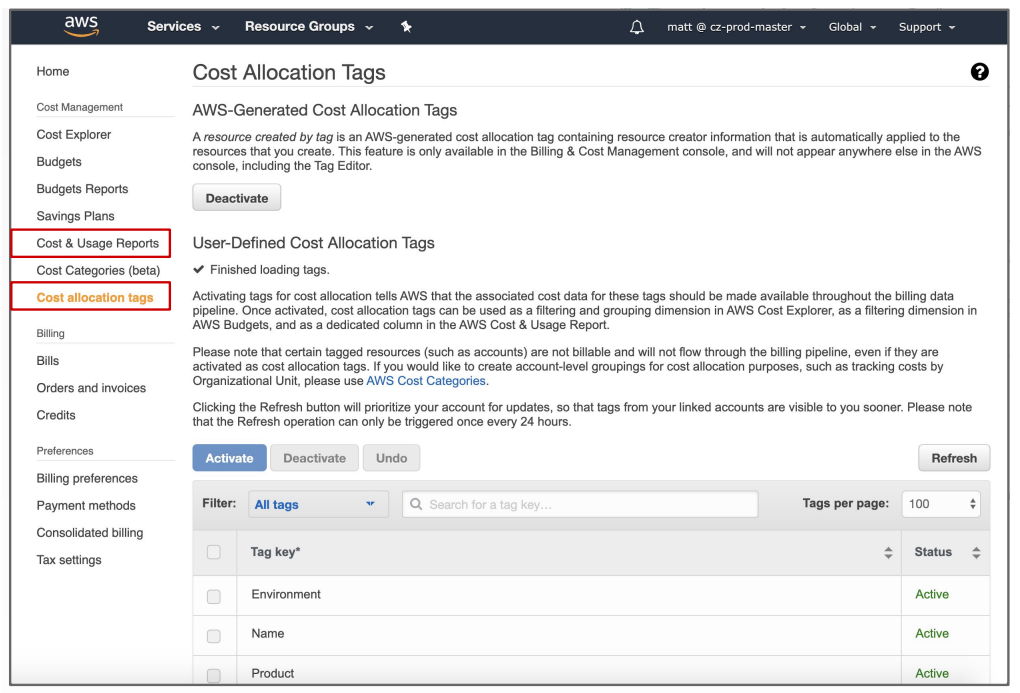

Next, focus on establishing a company tagging policy. Tagging will enable teams to identify, organize, filter, and search for resources within AWS. Tagging works best when there’s a company policy that sets expectations for engineering teams. You can make this process less manual by incorporating standard tags into your Terraform or CloudFormation templates.

Once the team has started tagging its infrastructure, activate important tags with cost allocation tagging, so they can be used with AWS Cost Explorer or cost management vendors. For example, enabling “AWS-Generated Cost Allocation Tags” creates a useful tag called “aws:createdBy” that records which identity, such as an identity and access management (IAM) user or role, created each resource.

For most organizations, tagging is a critical component for managing their cloud spend. If only it were easy to enforce! The first step to creating a tagging policy is to start setting expectations for engineering teams. The three most common tags to standardize are:

- Aligned with the service or application a resource supports

- Corresponding to the environment the resource is being deployed into

- Identifying the person or team responsible for the resource
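A tagging policy is easier to enforce when it can be checked automatically. The following is a minimal sketch of such a check, assuming three hypothetical tag keys (`service`, `environment`, `owner`) that correspond to the three purposes above; the article does not prescribe exact key names, so substitute your own. Resources are represented as plain dictionaries of tags rather than fetched from AWS.

```python
# Hypothetical required tag keys; the three purposes come from the list above,
# but these exact key names are assumptions for illustration.
REQUIRED_TAGS = ("service", "environment", "owner")


def missing_tags(resource_tags, required=REQUIRED_TAGS):
    """Return the required tag keys that are absent or empty on a resource."""
    # Keys are compared case-insensitively here; AWS tag keys are case-sensitive,
    # so a stricter policy may want an exact match instead.
    present = {
        key.lower() for key, value in resource_tags.items() if str(value).strip()
    }
    return [key for key in required if key not in present]


def tag_coverage(resources, required=REQUIRED_TAGS):
    """Fraction of resources that carry every required tag."""
    if not resources:
        return 1.0
    compliant = sum(
        1 for tags in resources.values() if not missing_tags(tags, required)
    )
    return compliant / len(resources)
```

A check like this could run in a CI/CD pipeline against planned resources, or periodically against an inventory export, to report which resources still need tags.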

Most development teams use continuous integration/continuous delivery (CI/CD) and/or infrastructure-as-code tools, which are great ways to tag newly created resources. Unfortunately, there’s usually a manual process involved in tagging existing resources (starting with the most expensive ones). Throughout this process, don’t forget to tag supporting resources such as Elastic Block Store (EBS) volumes or snapshots associated with Elastic Compute Cloud (EC2) instances. For teams looking for help maximizing their existing tags, tools like CloudZero can boost existing tag coverage.

Create an AWS Cost and Usage Report

Finally, create an hourly AWS Cost & Usage Report (CUR). Even if your organization is not planning to think about AWS cost optimization for another six months, most cost management vendors need to ingest CUR data. AWS doesn’t back-populate this data, so having it ready is helpful and doesn’t cost much. When creating an hourly CUR, check the “Include resource IDs” checkbox and leave the rest as defaults.

For the level of granularity that many cost management vendors (like CloudZero) need, you may want to use the following settings:

- Time Granularity: Hourly

- Report Versioning: Create New Report Version

- Compression: GZIP

- Include Resource IDs: ON

- Data Refresh Settings: Automatic
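If you prefer to create the report from code rather than the console, the settings above map roughly onto a report definition like the one sketched below. The function only builds a dictionary; the field names follow the Cost and Usage Report `PutReportDefinition` API as best understood here, and the report name, bucket, prefix, and Region are hypothetical placeholders. Verify the fields against the current AWS documentation before relying on them.

```python
def build_cur_definition(report_name, bucket, prefix, bucket_region):
    """Build a report definition mirroring the console settings listed above."""
    return {
        "ReportName": report_name,
        "TimeUnit": "HOURLY",                      # Time Granularity: Hourly
        "Format": "textORcsv",
        "Compression": "GZIP",                     # Compression: GZIP
        "AdditionalSchemaElements": ["RESOURCES"],  # Include Resource IDs: ON
        "S3Bucket": bucket,
        "S3Prefix": prefix,
        "S3Region": bucket_region,
        "RefreshClosedReports": True,              # Data Refresh Settings: Automatic
        "ReportVersioning": "CREATE_NEW_REPORT",   # Create New Report Version
    }


# Placeholder values for illustration only.
definition = build_cur_definition(
    "hourly-cur", "example-billing-bucket", "cur/", "us-east-1"
)
```

With an AWS SDK such as boto3, a dictionary like this would typically be passed as the `ReportDefinition` argument of the `cur` client's `put_report_definition` call; the S3 bucket also needs a policy that allows AWS billing to write to it.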

Use AWS Cost Explorer for AWS Cost Optimization

There are many useful features within AWS Cost Explorer for controlling costs. For example:

Use these to plan and manage reserved capacity, which can save up to 75% on the hourly rate compared to on-demand pricing.

If the company’s environment is relatively static and the team has good account segmentation, this resource can be helpful. However, it can quickly get complicated with different teams building different features.

A relatively new tool, AWS Compute Optimizer is a great resource for identifying EC2 waste.

Other AWS services help with understanding and optimizing infrastructure. AWS Config helps inventory your resources, and Trusted Advisor provides proactive recommendations to help optimize your AWS environment. Unlike the free resources listed above, costs for these services can add up depending on your environment.

CloudZero takes AWS cost optimization to the next level, with real-time data correlating costs to activities so you can zero in on problem areas quickly. Sign up for a free demo to see how.

Chapter 2: How To Avoid Common Architectural Waste

Just about every growing company has at some point incurred technical debt, the result of taking shortcuts to achieve more rapid gains. Inevitably, some development choices made sense at the time or were a quick fix to an urgent problem, but aren’t scaling with the business. These things aren’t necessarily quick to fix and have to be planned out, just like any other tech debt reduction activity.

So why does tech debt build up?

- There are always time constraints on engineering and usually competing priorities.

- Engineers don’t have visibility into the cost of features they’re building, or the tools to give them relevant and timely cost feedback.

- Last and most strategic, cost isn’t usually treated as a non-functional requirement during the design phase, the way performance or security are.

Imagine if an engineering team knew their service couldn’t cost more than $1,000 per month based on a certain production load. It would certainly impact the way they designed their system. Engineers thrive on data, but they often don’t have access to cost requirements or cost feedback — which has to change.

Technical debt sometimes occurs in the realm of cloud governance and the organization of resources. Specifically, we often see AWS cost optimization opportunities in a few common areas: Snapshots, Previous Generation Compute, Network Address Translation (NAT) Gateway, Amazon Simple Storage Service (S3), AWS Management Services, and DNS Queries.

1. Snapshots

Snapshots are often used as a backup storage system in AWS. Nearly all companies spend money on snapshots, which isn’t a problem in and of itself.

However, if the team is spending 5–10% of the monthly bill on snapshots or has snapshots older than 90 days, this is an area of concern.

In many cases, snapshot costs build up because there aren’t non-functional requirements around backup and recovery. If conservative defaults are left in place or no explicit lifecycle policies are created, costs can easily accumulate. For applications built before 2018, snapshots had to be managed manually, which also leads to problems in some organizations.

How to reduce AWS cost for snapshots:

If possible, use the AWS Data Lifecycle Manager to automate snapshots. Then, change the retention period on existing snapshots, depending on the business’s requirements for the application or service. Typical retention periods may range from 7 to 14 days. If the team has snapshots older than 90 days, consider moving them to a lower-cost storage tier such as Amazon EBS Snapshots Archive.
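To check for the two warning signs described in this section, snapshots older than 90 days and snapshot spend in the 5–10% range, a small script can help. The sketch below assumes snapshot records shaped like the output of the EC2 `DescribeSnapshots` API (`SnapshotId` and a timezone-aware `StartTime`); the sample data and cost figures are made up for illustration.

```python
from datetime import datetime, timedelta, timezone


def old_snapshots(snapshots, now=None, max_age_days=90):
    """Return IDs of snapshots whose start time is older than max_age_days."""
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(days=max_age_days)
    return [s["SnapshotId"] for s in snapshots if s["StartTime"] < cutoff]


def snapshot_share(snapshot_cost, total_bill):
    """Snapshot spend as a fraction of the monthly bill."""
    return snapshot_cost / total_bill if total_bill else 0.0


# Made-up sample data for illustration.
sample = [
    {"SnapshotId": "snap-old", "StartTime": datetime(2024, 1, 1, tzinfo=timezone.utc)},
    {"SnapshotId": "snap-new", "StartTime": datetime(2024, 5, 25, tzinfo=timezone.utc)},
]
stale = old_snapshots(sample, now=datetime(2024, 6, 1, tzinfo=timezone.utc))
```

Snapshots flagged this way are candidates for review, not automatic deletion; retention requirements for the application should decide what happens to them.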


2. Previous Generation Compute

Compute running on legacy AWS infrastructure is referred to as “previous generation compute.” It’s not always an easy area of cost tech debt to remedy.

If an application or an account is spending more than 10% of resources on previous generation hardware, this may be an area to remediate.

There are two main causes of previous generation compute, both of which speak to why it’s so complicated to fix:

- Once an application or service is in maintenance mode, moving to new hardware requires a lot of testing.

- Many times companies have previous-generation compute tied up in reserved instances, and they don’t want to lose the coverage.

How to reduce AWS cost for previous generation compute:

If the business can migrate to the current generation hardware, companies can usually see cost reductions of 5–20%, as well as performance improvements. However, you need to proceed with caution. Updating an instance can require more effort than anticipated and have unintended consequences when upgrading to newer versions.
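One way to test the 10% symptom is to group compute spend by instance family. The sketch below assumes you already have a mapping of instance type to monthly cost (for example, exported from a cost report). The set of previous-generation families is illustrative and not exhaustive; check AWS's list of previous-generation instances for the current one.

```python
# Illustrative, non-exhaustive set of older instance families (an assumption;
# consult AWS's previous-generation instances list for the current set).
PREVIOUS_GEN_FAMILIES = {"t1", "m1", "m2", "m3", "c1", "c3", "r3", "i2"}


def previous_gen_share(cost_by_instance_type, families=PREVIOUS_GEN_FAMILIES):
    """Fraction of compute spend on instance types in the given families."""
    total = sum(cost_by_instance_type.values())
    if total == 0:
        return 0.0
    legacy = sum(
        cost
        for instance_type, cost in cost_by_instance_type.items()
        # "m3.large" -> family "m3"
        if instance_type.split(".")[0].lower() in families
    )
    return legacy / total


# Made-up monthly costs for illustration.
share = previous_gen_share({"m3.large": 150.0, "m5.large": 850.0})
needs_review = share > 0.10
```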

3. NAT Gateway

Amazon’s NAT Gateway makes it easy to securely connect to the internet from a private subnet in a VPC. Companies pay for usage hours and for gigabytes passing through the gateway. Many people use it because it’s a simple managed service.

If NAT Gateway costs exceed 5% of a feature or an account’s spend, this is an area of savings opportunity.

Data transfer within AWS is complicated, and it’s hard to understand how much data is going to traverse the gateway at scale. This can lead to architectures that have excessive NAT Gateway charges.

How to reduce NAT Gateway costs:

There’s an easy way to reduce NAT Gateway costs; the five steps are outlined within this blog post. The process involves first using VPC Flow Logs to analyze data transfer through the gateway and then using VPC endpoints wherever possible, depending on the traffic and architecture.
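Because NAT Gateway is billed on both usage hours and gigabytes processed, a quick estimate shows which of the two dominates. The sketch below is a back-of-the-envelope calculation; the default rates are placeholder assumptions, not quoted prices, so substitute the rates for your Region.

```python
def nat_gateway_monthly_cost(hours, gb_processed, hourly_rate=0.045, per_gb_rate=0.045):
    """Estimate monthly NAT Gateway cost from usage hours and data processed.

    The default rates are placeholder assumptions; use your Region's prices.
    """
    return hours * hourly_rate + gb_processed * per_gb_rate


def exceeds_share(cost, total_spend, threshold=0.05):
    """True if cost is more than `threshold` (5% by default) of total spend."""
    return total_spend > 0 and cost / total_spend > threshold


# One gateway running all month (about 730 hours) processing 10,000 GB.
estimate = nat_gateway_monthly_cost(hours=730, gb_processed=10_000)
flagged = exceeds_share(estimate, total_spend=8_000)
```

In this made-up example the data processing charge is far larger than the hourly charge, which is the usual pattern when NAT Gateway costs become a concern, and it is why analyzing what traffic traverses the gateway comes first.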


4. Amazon Simple Storage Service (S3)

When most people think about S3, they think primarily about storage. There are actually three different cost drivers for S3 buckets: storage, API activity, and data transfer.

Typically, if the company is spending more than 10% of its AWS budget on S3, there may be areas to optimize costs.

S3 is one of Amazon’s oldest, most widely used services with broad applications. This broad applicability can lead to costly S3 buckets. There are also a number of storage classes that not everyone understands, and the idea of intelligent tiering is fairly new.

How to reduce AWS S3 costs:

If storage drives S3 costs, turn on S3 analytics (storage class analysis) for the bucket. This feature comes at a minimal cost, and after it has observed access patterns for a while, AWS will make recommendations on how to optimize storage tiers.

If API activity or data transfer drives S3 costs, it’s a bit harder to diagnose. Analyze the activity hitting the bucket, and work with engineering to explore optimizations.

If data transfer drives the cost, explore the cache-control headers on the files within the buckets. Oftentimes engineering must explore what’s causing all the data transfer.

Beware of lifecycle policies that migrate rapidly changing transactional data to Amazon S3 Glacier. The cost of transitioning the data to Glacier alone can dwarf the savings you gain from Glacier’s less expensive storage tier.
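A rough break-even calculation makes the Glacier warning concrete. Transition requests are charged per object, while storage savings accrue per gigabyte, so many small objects are the worst case. The sketch below uses placeholder prices that are assumptions rather than quoted rates, and it ignores per-object overhead and minimum storage duration charges, both of which would make the picture worse.

```python
def glacier_break_even_months(
    object_count,
    avg_object_kb,
    transition_price_per_1000=0.03,  # assumed price per 1,000 transition requests
    standard_gb_month=0.023,         # assumed S3 Standard price per GB-month
    glacier_gb_month=0.0036,         # assumed Glacier price per GB-month
):
    """Months of storage savings needed to recover the one-time transition cost."""
    total_gb = object_count * avg_object_kb / (1024 * 1024)
    transition_cost = object_count / 1000 * transition_price_per_1000
    monthly_saving = total_gb * (standard_gb_month - glacier_gb_month)
    if monthly_saving <= 0:
        return float("inf")
    return transition_cost / monthly_saving


# 100 million objects of 10 KB each versus 100,000 objects of 10 MB each
# (roughly the same total volume of data).
small_objects = glacier_break_even_months(100_000_000, 10)
large_objects = glacier_break_even_months(100_000, 10 * 1024)
```

Under these assumed prices, the small-object case takes more than a decade of storage savings to pay back the transition requests, while the large-object case pays back within the first month. If the data is rewritten or deleted before the break-even point, the transition loses money.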

You should consider the use cases of your system closely when you are working with S3. S3 is typically among the lowest-cost options for storing data, but if you have data that is very transactional and subject to high-volume access, ensure caching is implemented correctly on your frontend, or consider using a managed database like DocumentDB or DynamoDB to serve the data instead.

5. AWS Management Services

AWS Management Services are used to help understand other services or resources. These typically include CloudTrail, CloudWatch, Amazon Macie, and Config.

If your accounts spend more than 10–20% on AWS Management Services, there may be optimization opportunities.

The following are three causes of overspend on AWS Management Services, paired with the steps to address them:

- For CloudTrail specifically, spending more than a few hundred dollars per month can usually signal waste. Organizations get one free trail per account, and after that are charged per trail. In most cases you only need one organizational CloudTrail, so explore removing extraneous ones.

- CloudWatch varies more per organization. Cloud-native applications will spend more on CloudWatch, which drives higher percentages. High CloudWatch charges are usually a result of a few expensive logs and/or something making excessive metric-retrieval API calls such as ‘GetMetricData’. Third-party services can drive these costs up. The solution involves looking at CloudTrail to identify the problematic actor, and then working with engineering to understand what is happening.

- Config’s cost scales based on the number of resources in an account and rules configured. It can be higher than people anticipate, especially for more dynamic environments. Config is easy to analyze based on the amount the company is paying per month relative to the value Config provides.

6. DNS Queries

Amazon’s Route 53 service, among other things, connects user requests to infrastructure running on AWS. Engineering teams don’t always realize they’re paying for DNS queries.

There’s no hard and fast symptom here, as costs can range greatly. As a general rule, companies can optimize if they’re spending more than $250 per month on DNS Queries.

The main driver of DNS costs is usually an org with lots of publicly available infrastructure. Occasionally, companies spend hundreds or thousands of dollars per month performing DNS lookups (which equates to billions of requests).

How to reduce costs for DNS queries:

Work with engineering to understand why so many requests are being sent. DNS queries can usually be optimized (not removed), once identified.
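To put the $250 rule of thumb in perspective, it helps to convert dollars into query volume. The sketch below assumes a flat placeholder price per million queries; actual Route 53 pricing depends on query type and volume tiers, so treat the rate as an assumption.

```python
def dns_query_cost(queries, price_per_million=0.40):
    """Estimated monthly cost for a number of DNS queries (flat-rate assumption)."""
    return queries / 1_000_000 * price_per_million


def queries_for_budget(budget, price_per_million=0.40):
    """How many queries a monthly budget buys at the assumed flat rate."""
    return budget / price_per_million * 1_000_000


monthly_queries_at_threshold = queries_for_budget(250)
cost_of_two_billion = dns_query_cost(2_000_000_000)
```

At the assumed rate, $250 per month corresponds to several hundred million queries, which is consistent with the point above that spending in the high hundreds or thousands of dollars implies billions of requests.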

Chapter 3: How To Proactively Control AWS Costs

The ideal is to control AWS costs proactively rather than pay down cost-related tech debt later. The following are some strategies for developing a cost-conscious engineering team that has the right tools and a thorough understanding of how to use AWS optimally.

Embrace AWS Cost-Conscious Engineering

Engineers make buying decisions daily, whether by the architectures they design or instances they start. The only way to strategically control cost is to empower engineering teams and hold them accountable for the cost, just like they’re accountable for performance and security. The AWS Well-Architected Framework has a cost optimization section you can reference.

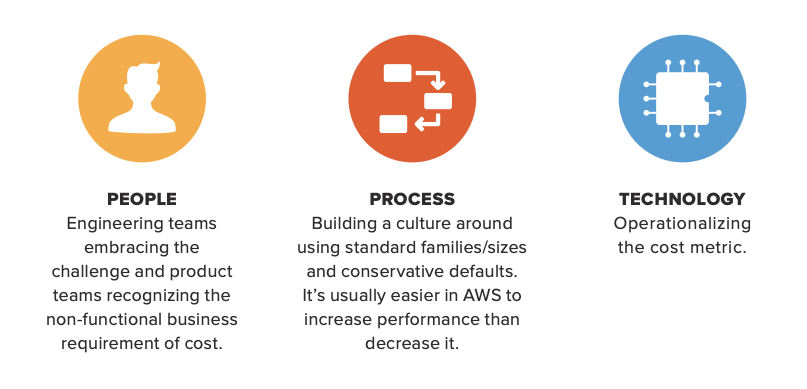

Link People, Processes, and Technology for AWS Cost Optimization

AWS cost optimization is a strategy that relies on people, processes, and technology working together to manage costs. The people must be aware of the costs associated with their activities and the solutions they devise, and the process must make recognizing and controlling costs a key objective. Technology must support both the people and process by providing the information needed to make timely decisions based on an understanding of the associated costs.

Manage Your Cloud Investment

Companies should be proactive about managing their cloud investment, not just their cloud costs. Change is a constant with AWS, and organizations struggle to understand what cost changes are meaningful to focus on. Increasing costs aren’t necessarily a problem, if the revenue for a product or feature is increasing. It all starts with understanding how much your features and products cost.
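One simple way to separate healthy cost growth from worrying cost growth is to track cost as a share of revenue for each product or feature. The sketch below flags months in which that ratio rose compared with the previous month; the input format and the sample figures are hypothetical.

```python
def cost_outpacing_revenue(months):
    """Return labels of months where cost/revenue rose versus the prior month.

    `months` is a list of (label, cost, revenue) tuples in chronological order.
    """
    flagged = []
    for (_, prev_cost, prev_rev), (label, cost, rev) in zip(months, months[1:]):
        if prev_rev > 0 and rev > 0 and cost / rev > prev_cost / prev_rev:
            flagged.append(label)
    return flagged


# Made-up figures: February's cost rises, but revenue rises faster.
history = [
    ("Jan", 1_000, 10_000),
    ("Feb", 1_500, 20_000),
    ("Mar", 2_400, 22_000),
]
months_to_review = cost_outpacing_revenue(history)
```

In this example, February's higher bill is not flagged because revenue doubled, while March is flagged because cost grew faster than revenue.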

Aligning costs to features and products has traditionally been very difficult for a number of reasons, including inadequate tagging and the presence of numerous accounts. There’s also the reality of shared infrastructure and containerization, which make apportioning cost to specific features or products non-trivial. These challenges need to be addressed before the company can understand the costs of features or products.

Top 3 Causes of Tech Debt Waste

- Idle EC2 Instances: AWS EC2 provides secure and reliable compute capacity in the cloud.

- Idle RDS Instances: AWS RDS is a relational database service. Like EC2, it is one of the most popular AWS services.

- Unattached EBS Volumes: AWS Elastic Block Store is a service commonly used with EC2 for both throughput and transaction-intensive workloads. With the default configuration, non-root EBS volumes are generally not deleted when an instance is terminated.

The top three causes driving your AWS costs can be addressed with the following steps:

- Use AWS Compute Optimizer to right-size EC2 instances.

- Use CloudWatch to identify:

  - Idle EC2: low CPU, memory, and network utilization

  - Idle RDS: no connections and low CPU

- Use the EC2 → Elastic Block Store (EBS) interface to view a volume’s state.

- Before deleting anything, make sure the data is no longer needed. If it might be, take a snapshot of the data first (a lower-cost storage option).

- Use the EBS “Delete on Termination” configuration option.
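The idle checks above can be expressed as simple rules over metric samples. The sketch below assumes you have already collected CPU and connection-count samples (for example, from CloudWatch) as plain lists, and volume records shaped like the EC2 `DescribeVolumes` output, where a `State` of `available` indicates a volume that is not attached to any instance. The 5% CPU threshold is an arbitrary assumption; tune it to your workloads.

```python
def is_idle(cpu_samples, cpu_threshold=5.0):
    """Treat a resource as idle when every CPU sample is below the threshold."""
    return bool(cpu_samples) and max(cpu_samples) < cpu_threshold


def idle_rds(cpu_samples, connection_samples, cpu_threshold=5.0):
    """Idle RDS: low CPU and no database connections in any sample."""
    return (
        is_idle(cpu_samples, cpu_threshold)
        and bool(connection_samples)
        and max(connection_samples) == 0
    )


def unattached_volumes(volumes):
    """Return IDs of volumes in the 'available' state (not attached)."""
    return [v["VolumeId"] for v in volumes if v.get("State") == "available"]


# Made-up sample data for illustration.
volumes = [
    {"VolumeId": "vol-a", "State": "available"},
    {"VolumeId": "vol-b", "State": "in-use"},
]
orphans = unattached_volumes(volumes)
```

Requiring every sample to be below the threshold, rather than the average, is a deliberately conservative choice: it avoids flagging batch hosts that are busy for only a short window each day.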

Adopt the Right Process and Tooling

At a tactical level, it really comes down to having the necessary process and tooling in place to be able to hold teams accountable for the cost of their features and products. Here are some examples of questions to ask various stakeholders:

- Engineers: “What is causing the cost of my feature to grow or shrink?”

- DevOps: “As our operations and customer base grow, is our spend scaling linearly? Are there operational changes that are causing costs to spike?”

- Product Teams: Inquire about the costs of their products to support margin analysis. “Are the costs of our features/products trending acceptably per the business projections?”

Creating a cost-conscious engineering culture and understanding the costs of your features and products is the foundation for actively managing your cloud investment. This starts with providing the right context to the right teams. For product and engineering teams, this means showing them the costs of the features they are responsible for, so they can take ownership.

It also means they need the right context to understand the underlying sources of each cost. This can be accomplished in a number of ways, but solutions like CloudZero are purpose-built to help organizations adopt a proactive approach to AWS cost optimization by giving teams data on the cost of their features and products.

Take AWS Cost Optimization To the Next Level

In this guide, we’ve examined AWS cost optimization from setup through proactive cost control. Following these guidelines will help you get a handle on what is driving tech debt and how you can avoid it. But these steps can only take you so far.

CloudZero can help you zero in on the specific issues driving your AWS costs. It provides real-time, in-depth analysis of your cost data sorted by function, user, time, and other metrics — making areas of waste easy to pinpoint.

CloudZero provides the context you need for making decisions about how to reduce your AWS bill. By organizing your costs and correlating them to the underlying engineering activity, you can quickly detect anomalies before costs accumulate.

Even better, CloudZero makes costs transparent during the design phase, so your engineers can make informed decisions and build cost-effective computing into your application — eliminating the need to address tech debt further down the road.

CloudZero includes these unique features to give you maximum control over your AWS costs:

- Machine learning to classify costs: The CloudZero platform normalizes and correlates data, then uses machine learning to sort and associate costs with the processes that generate them.

- Unit cost analysis: CloudZero aligns cost to your business and helps you report on key business metrics, such as cost per customer, transaction, message, and more.

- Engineering-level context: CloudZero collects and correlates cost and engineering data, so you can see the source of a cost in minutes.

- Automated tagging assistance: Without manual input, CloudZero organizes your resources by product, feature, and team.

- Relevant team views of cost data: The CloudZero platform sorts data by product, feature, or team and sends alerts directly to the appropriate team’s Slack channel.

- Automatic anomaly alerts: Machine learning automatically determines which anomalies need to be addressed and sends an alert to the appropriate team.

- Real-time, granular cost data: Other cost optimization tools pull data every 24 hours; CloudZero updates data billions of times per second, from 74 different AWS service plug-ins and data streams like CloudTrail and CloudWatch.

Ready to take AWS cost optimization to the next level?

CloudZero is the only cloud cost intelligence solution that automatically correlates both billing and resource data from across your AWS account to group costs and surface insights. Instead of wasting time to determine what is driving cost anomalies, you can get to work immediately on addressing them. Sign up for a free demo to see how CloudZero can help your company optimize your AWS costs.