Cloud-native applications keep evolving and growing in complexity, and that complexity often hurts most when you manage Kubernetes costs in Azure. The need for AKS cost optimization may seem obvious, but achieving it is often difficult.

Azure Kubernetes Service (AKS), Microsoft’s managed Kubernetes service, helps you deploy, run, and manage containerized applications. But while it simplifies operations and can improve performance, it can also generate unexpected costs when it isn’t managed carefully.

We’ve already shared seven cost optimization strategies for EKS and 12 cost optimization best practices for GKE.

In this guide, we’ll share practical tips to help you optimize your AKS costs without losing your mind.

What Is Azure Kubernetes Service (AKS)?

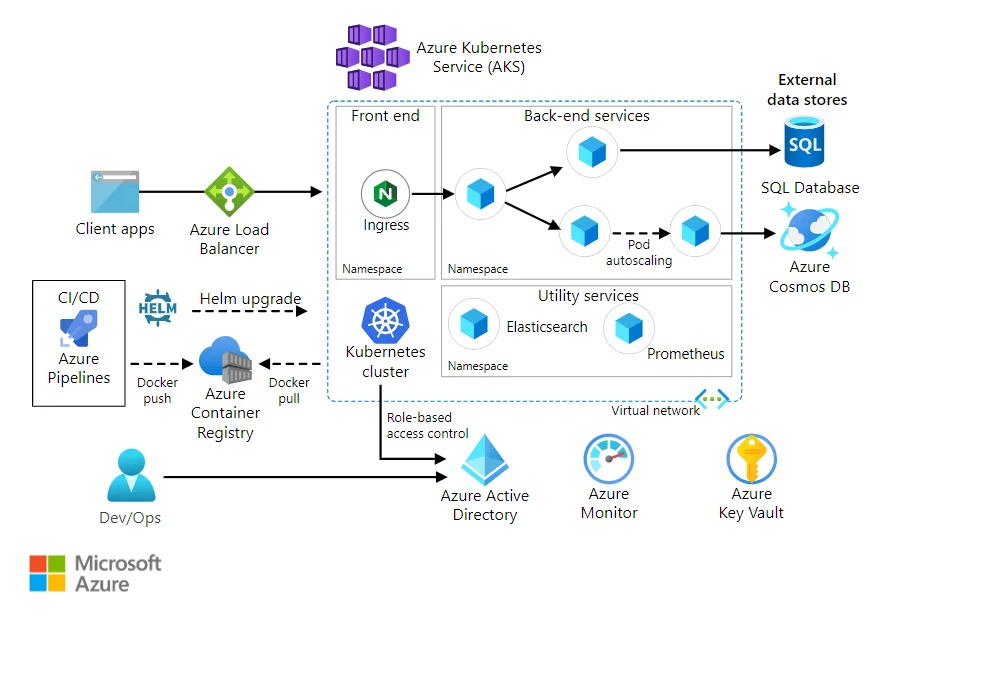

Microsoft’s Azure Kubernetes Service is an enterprise-grade managed Kubernetes platform. AKS is a highly available, portable, and scalable way to run containerized workloads in the cloud, on-premises, and at the edge.

Credit: Microservices architecture on AKS

AKS simplifies cluster deployment and management by automating complex tasks, such as provisioning, patching, and scaling your infrastructure. The Microsoft Kubernetes service also features automatic scaling, rolling updates, and self-healing, simplifying your work further.

AKS is also a leading alternative to Amazon’s Elastic Kubernetes Service (EKS) and Google Cloud’s Google Kubernetes Engine (GKE), and it offers advantages over both in several key areas, which you may already recognize if you use it:

- Seamless integration with the Microsoft ecosystem: If you already have licenses for Microsoft products and Azure services such as SQL Server and Microsoft 365, AKS integrates smoothly with them.

- Unified management with Azure Arc: Azure Arc lets you manage Kubernetes clusters across different environments from a single interface, a significant advantage over EKS and GKE. AKS also integrates natively with other Azure tools, such as Azure Policy, Azure Active Directory (now Microsoft Entra ID), Azure Monitor, and Azure Container Registry. This provides a unified approach to running containerized applications on Azure.

- Cost-effectiveness: AKS pricing is based mainly on the infrastructure you use, and the Free tier doesn’t charge for cluster management. EKS, on the other hand, charges for Kubernetes control plane (master node) operations on every cluster. See our AKS vs EKS cost-saving features guide for more details.

- Customization: AKS offers more customization options than EKS, which has a more streamlined setup process but limits customization.

As with EKS and GKE, AKS pricing can get murky, and it’s hard to optimize what you don’t fully understand. So let’s start by breaking down how AKS pricing works, then move on to practical tips you can use to optimize AKS costs immediately.

What Are The Different AKS Pricing Models Available Today?

Azure Kubernetes Service offers several pricing options: the Free tier, Pay-as-you-go, Azure Savings Plans, Azure Reserved VMs, and Azure Spot VMs. This gives you flexibility for different usage scenarios.

Here’s a quick look at each of the current AKS pricing models:

- Free Tier: A new Azure free account gives you a $200 credit for 30 days, enough to try AKS with small-scale dev and test projects. You also get free but limited access to many other Azure products for 12 months.

- Pay-As-You-Go: Charges are based on resource consumption, such as the number and size of the VMs (nodes) you run, billed per second, plus any additional services. No long-term contracts or upfront payments are required, and you can scale your usage up or down as your needs change. You can upgrade to this option once your Free tier credit is used up or expires.

- Reserved Instances: While the pay-as-you-go option offers massive flexibility, it is also the most expensive. The alternative is to commit to a one-year or three-year term in exchange for a lower price.

- Spot VMs: AKS lets you run workloads on spare Azure capacity at a discounted price, offering up to 90% off the pay-as-you-go rate. However, these VMs can be evicted at any time when Azure needs the capacity back, making them less suitable for critical applications.

- Savings Plans: Savings Plans let you commit to spending a fixed hourly amount for 1 or 3 years. You can save up to 65% compared to the pay-as-you-go pricing option.

We’ve already touched on the Azure pricing options that offer the biggest savings. Below, you’ll find more details on them, along with other AKS cost optimization strategies you can implement immediately to maximize your ROI.

AKS Cost Optimization Techniques To Apply Immediately

The following AKS cost optimization best practices can help you reduce waste and make the most of AKS.

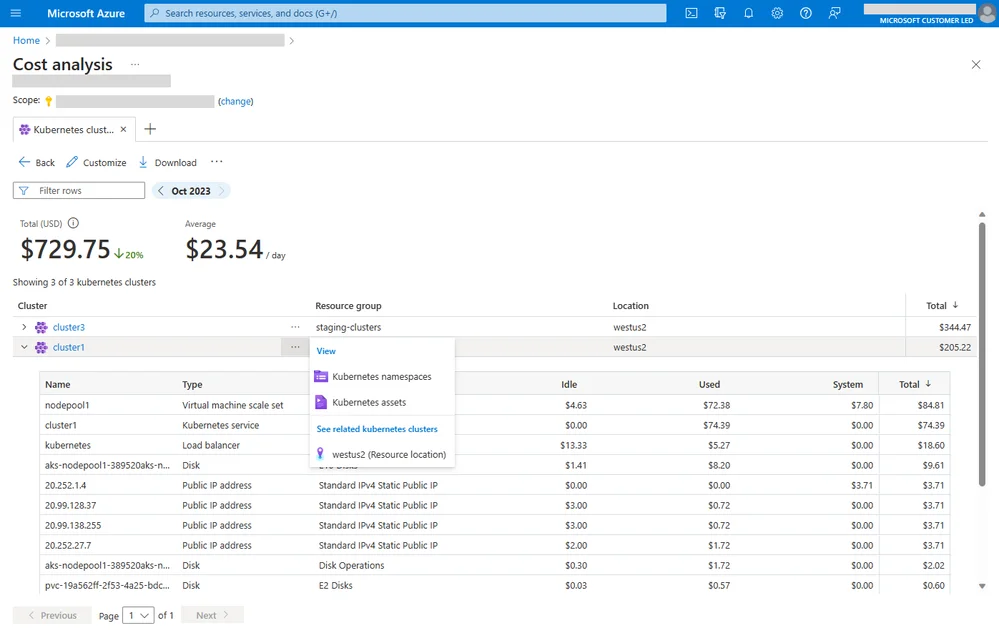

1. Use the AKS Cost Analysis add-on

The add-on provides AKS-specific cost breakdowns. Built on the vendor-neutral OpenCost project, the AKS cost analysis add-on offers a more granular way to analyze your Kubernetes cluster costs on Azure.

You can view costs by namespace and cluster. You can also apportion shared costs (such as service SLA and system reserved costs) and allocate them uniformly, by fixed percentage, or proportionally.

Credit: Azure
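To make the three allocation methods concrete, here is a minimal Python sketch. The namespace names and dollar amounts are hypothetical, and the functions illustrate the arithmetic only; they are not how the add-on computes its numbers internally.

```python
# Sketch: three ways to apportion a shared AKS cost (for example, system-reserved
# capacity) across namespaces. Illustrative only; not the add-on's actual code.


def allocate_uniform(shared: float, namespaces: list[str]) -> dict[str, float]:
    """Split the shared cost equally across all namespaces."""
    share = shared / len(namespaces)
    return {ns: share for ns in namespaces}


def allocate_fixed(shared: float, percentages: dict[str, float]) -> dict[str, float]:
    """Split the shared cost by pre-agreed percentages that must sum to 100."""
    total = sum(percentages.values())
    if abs(total - 100.0) > 1e-9:
        raise ValueError(f"percentages must sum to 100, got {total}")
    return {ns: shared * pct / 100.0 for ns, pct in percentages.items()}


def allocate_proportional(shared: float, direct_costs: dict[str, float]) -> dict[str, float]:
    """Split the shared cost in proportion to each namespace's own direct cost."""
    total = sum(direct_costs.values())
    return {ns: shared * cost / total for ns, cost in direct_costs.items()}


if __name__ == "__main__":
    shared = 300.0  # hypothetical monthly shared cost, in USD
    direct = {"checkout": 600.0, "search": 300.0, "batch": 100.0}  # hypothetical
    print(allocate_uniform(shared, list(direct)))  # 100.0 each
    print(allocate_fixed(shared, {"checkout": 50, "search": 30, "batch": 20}))
    print(allocate_proportional(shared, direct))  # checkout 180.0, search 90.0, batch 30.0
```

Proportional allocation charges heavy users more, while uniform allocation is simpler but can overcharge small namespaces.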

2. Collect, monitor, and allocate 100% of your Azure Kubernetes Service costs

Start by leveling up your AKS cost analysis. Knowing your AKS costs helps you see which trade-offs you can make without sacrificing performance, security, or customer experience.

Azure Cost Management provides high-level views of your AKS costs, and Azure Advisor provides tailored cost-saving recommendations. However, many cost tools struggle to ingest, enrich, and deliver 100% of Kubernetes cost insights. Using a platform like CloudZero to optimize AKS will help you close that gap.

With CloudZero, you’ll do more than get high-level cost insights such as total and average costs. You’ll get even more granular, immediately actionable cost intelligence such as cost per customer, team, environment, and service.

By identifying who and what is driving changes in your AKS spend, and why, you can tell where to focus your cost optimization efforts to prevent overspending.

There’s more. This knowledge can help you make informed decisions about resource allocations and investments. Further, it can also help you create a budget plan that is realistic and achievable.
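Unit costs also help explain why spend changed. The sketch below is a minimal illustration with hypothetical numbers (not CloudZero’s method): it splits a month-over-month change in AKS cost into the part caused by serving more customers and the part caused by a change in cost per customer.

```python
from dataclasses import dataclass


@dataclass
class Period:
    cost: float     # allocated AKS cost for the period, in USD
    customers: int  # active customers served in the period


def cost_per_customer(p: Period) -> float:
    return p.cost / p.customers


def explain_change(before: Period, after: Period) -> dict[str, float]:
    """Split the change in total cost into a volume part and a unit-cost part.

    volume:    more (or fewer) customers, priced at the old cost per customer
    unit_cost: the change in cost per customer, applied to the new customer count
    The two parts always add up to the total change.
    """
    u0, u1 = cost_per_customer(before), cost_per_customer(after)
    volume = (after.customers - before.customers) * u0
    unit_cost = (u1 - u0) * after.customers
    return {"volume": volume, "unit_cost": unit_cost, "total": after.cost - before.cost}


if __name__ == "__main__":
    # Hypothetical: spend rose by $3,200, but most of it came from customer growth.
    print(explain_change(Period(10_000.0, 500), Period(13_200.0, 600)))
    # {'volume': 2000.0, 'unit_cost': 1200.0, 'total': 3200.0}
```

A rising total with a flat cost per customer usually signals healthy growth; a rising cost per customer is the part worth investigating.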

3. Rightsize your AKS pods/containers

Once you understand how many resources you need to achieve your goals without compromising performance, it’s time to target waste. Adjust the resources allocated to containers to match their actual requirements.

Specific resources you can rightsize in AKS include CPU and memory allocations for containers, persistent volumes, and storage classes for data storage. You can avoid overprovisioning and minimize unnecessary costs by accurately matching resource requirements to actual needs.
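One common way to pick new requests is to size them from observed usage. The following Python sketch assumes you already have per-container CPU usage samples (for example, exported from Azure Monitor or Prometheus); it takes the 95th percentile, adds 15% headroom, and rounds up. The percentile, headroom, and sample values are illustrative assumptions, not recommendations.

```python
import math


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile (pct between 0 and 100)."""
    if not samples:
        raise ValueError("need at least one usage sample")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def recommend_request(samples: list[float], pct: float = 95.0,
                      headroom: float = 0.15, step: int = 10) -> int:
    """Suggest a resource request: the pct-th percentile of observed usage,
    plus a safety headroom, rounded up to a multiple of `step`."""
    target = percentile(samples, pct) * (1 + headroom)
    return int(math.ceil(target / step) * step)


if __name__ == "__main__":
    # Hypothetical CPU usage samples (millicores) for a container that requests 1000m.
    cpu_millicores = [120, 130, 125, 140, 135, 150, 160, 145, 138, 300]
    print(f"suggested CPU request: {recommend_request(cpu_millicores)}m")
```

For memory, a higher percentile or the observed peak is usually the safer basis, because a container that exceeds its memory limit is OOM-killed rather than throttled.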

4. Run predictable workloads with Reserved Instances

Using AKS reservations can save you up to 72% compared to the standard, pay-as-you-go rate.

In return, you commit to a specific VM size in a specific Azure region for a 1- or 3-year term. For example, if an application will (almost) always need 2 vCPUs and 8 GB of RAM, you can reserve a matching VM size, such as Standard_D2s_v3, for the node pool that runs it and pay a lower rate for that capacity.

Reserved AKS instances are best suited to predictable workloads that run steadily for the whole term.
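Because a reservation is billed for every hour of its term, it only pays off if the reserved capacity is used enough. This sketch compares one year of pay-as-you-go and reserved costs for a single VM size; the $0.10 and $0.06 hourly rates are hypothetical placeholders, so substitute the real prices for your VM size and region.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760


def payg_annual_cost(hourly_rate: float, hours_used: float) -> float:
    """Pay-as-you-go: you pay only for the hours the VM actually runs."""
    return hourly_rate * hours_used


def reserved_annual_cost(effective_hourly_rate: float) -> float:
    """Reservation: billed for every hour of the term, whether the VM runs or not."""
    return effective_hourly_rate * HOURS_PER_YEAR


def break_even_utilization(payg_rate: float, reserved_rate: float) -> float:
    """Fraction of the year a VM must run before the reservation becomes cheaper."""
    return reserved_rate / payg_rate


if __name__ == "__main__":
    payg, reserved = 0.10, 0.06  # HYPOTHETICAL USD/hour rates for one node VM size
    for utilization in (0.4, 0.6, 1.0):
        hours = utilization * HOURS_PER_YEAR
        print(f"{utilization:.0%} utilization: "
              f"PAYG ${payg_annual_cost(payg, hours):,.2f} vs "
              f"reserved ${reserved_annual_cost(reserved):,.2f}")
    print(f"break-even utilization: {break_even_utilization(payg, reserved):.0%}")
```

Below the break-even utilization, pay-as-you-go is cheaper; above it, the reservation wins, which is why reservations suit always-on node pools.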

One more thing. To maximize cost savings, ensure that your reserved instances and savings plans are aligned with actual demand. CloudZero has partnered with ProsperOps to help you with this.

ProsperOps automates portfolio management for RIs and Savings Plans. This integration ensures that as a CloudZero user, you can:

5. Monitor your Effective Savings Rate (ESR)

Your ESR gives you insight into the ROI of cloud discount instruments, such as RIs and SPs, helping you decide whether to change or renew your commitments.
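ESR is commonly defined as the share of on-demand-equivalent cost you avoided: (on-demand equivalent cost - actual cost) / on-demand equivalent cost. The minimal sketch below uses that definition with hypothetical monthly figures. It also shows how unused commitments drag the rate down, since you pay for them without offsetting any on-demand usage.

```python
def effective_savings_rate(on_demand_equivalent: float, actual_spend: float) -> float:
    """ESR = (on-demand equivalent cost - what you actually paid) / on-demand equivalent cost.

    actual_spend should include everything you paid for the usage: reservation and
    savings plan charges (including unused commitment) plus any leftover on-demand spend.
    """
    if on_demand_equivalent <= 0:
        raise ValueError("on-demand equivalent spend must be positive")
    return (on_demand_equivalent - actual_spend) / on_demand_equivalent


if __name__ == "__main__":
    # Hypothetical month: usage that would cost $10,000 at pay-as-you-go rates.
    print(f"{effective_savings_rate(10_000, 6_500):.0%}")  # well-matched commitments
    print(f"{effective_savings_rate(10_000, 7_500):.0%}")  # same usage, $1,000 of unused reservations
```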

6. Take advantage of personalized, expert optimization guidance

Our team will set you up with ProsperOps. A dedicated ProsperOps and CloudZero team will guide you through a setup tailored to your needs. We’ll then offer you a free savings analysis to benchmark and forecast your savings. See how to get started here.

7. Use Spot VMs to run interruptible AKS workloads

Azure Spot VMs are best suited for temporary workloads that can handle interruptions, such as batch jobs that checkpoint their progress frequently. If you have workloads like these, consider running them on Spot VM node pools at up to 90% off pay-as-you-go rates.

What’s the catch? Azure can evict Spot VMs whenever it needs the capacity back, with only about 30 seconds of notice. Capacity availability also isn’t guaranteed.
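Interruption-tolerant jobs usually persist their progress so that a replacement pod can resume where the evicted one stopped. Here is a minimal Python sketch of that pattern. It assumes the job can be expressed as an ordered list of independent items and that the checkpoint file lives on storage that survives the node (such as a persistent volume); the class and file names are hypothetical. It reacts to SIGTERM, which Kubernetes sends when it terminates a pod gracefully, but because notice is so short it also checkpoints periodically in case no graceful shutdown happens.

```python
import json
import os
import signal
import tempfile
from typing import Callable, Sequence


class CheckpointedJob:
    """Process items in order and persist progress so an evicted run can resume."""

    def __init__(self, items: Sequence, checkpoint_path: str) -> None:
        self.items = items
        self.path = checkpoint_path
        self.stop_requested = False

    def request_stop(self, signum=None, frame=None) -> None:
        # Usable as a SIGTERM handler: Kubernetes sends SIGTERM when it
        # terminates a pod gracefully (for example, while draining a node).
        self.stop_requested = True

    def load_position(self) -> int:
        if os.path.exists(self.path):
            with open(self.path) as f:
                return json.load(f)["next_index"]
        return 0

    def save_position(self, index: int) -> None:
        # Write to a temporary file, then replace the checkpoint in one step,
        # so an abrupt kill never leaves a half-written checkpoint behind.
        tmp = self.path + ".tmp"
        with open(tmp, "w") as f:
            json.dump({"next_index": index}, f)
        os.replace(tmp, self.path)

    def run(self, process: Callable, checkpoint_every: int = 10) -> int:
        i = self.load_position()
        while i < len(self.items) and not self.stop_requested:
            process(self.items[i])
            i += 1
            if i % checkpoint_every == 0:
                self.save_position(i)
        self.save_position(i)  # final checkpoint on completion or stop request
        return i


if __name__ == "__main__":
    path = os.path.join(tempfile.gettempdir(), "demo-job-checkpoint.json")
    job = CheckpointedJob(list(range(100)), path)
    signal.signal(signal.SIGTERM, job.request_stop)
    print("next index:", job.run(lambda item: None))
```

Periodic checkpoints bound how much work an abrupt eviction can lose: at most `checkpoint_every` items.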

8. Choose the right AKS pricing tier for the job

AKS offers two paid pricing tiers for cluster management: Standard and Premium. Both are enterprise-grade, support up to 5,000 nodes per cluster, and offer financially backed SLAs.

However, the Premium tier adds extra assurance for mission-critical applications, including two years of Kubernetes version support through Long-Term Support (LTS), comprehensive Microsoft support, and automatic monitoring and updates.

The Standard tier costs $0.10 per cluster per hour, while Premium costs $0.60 per cluster per hour. It’s up to you to decide whether your workloads need that extra reliability and support.
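To put the tier prices in monthly terms, this sketch multiplies the hourly rates quoted above by 730 hours, the monthly figure Azure’s pricing calculator typically assumes. The fee covers cluster management only; your node VMs are billed on top. Check current Azure pricing before relying on these rates.

```python
HOURS_PER_MONTH = 730  # the monthly hour count Azure's pricing calculator typically uses

# Cluster management fee per cluster-hour, in USD, as quoted in the text.
# Node VMs, storage, and networking are billed separately.
TIER_RATES = {"standard": 0.10, "premium": 0.60}


def monthly_management_fee(tier: str, clusters: int = 1) -> float:
    return TIER_RATES[tier] * HOURS_PER_MONTH * clusters


if __name__ == "__main__":
    for tier in TIER_RATES:
        print(f"{tier}: ${monthly_management_fee(tier):,.2f} per cluster per month")
    extra = monthly_management_fee("premium", 10) - monthly_management_fee("standard", 10)
    print(f"extra cost of Premium for 10 clusters: ${extra:,.2f} per month")
```

The gap grows linearly with cluster count, so Premium is easiest to justify on a few mission-critical clusters rather than across every dev and test cluster.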

9. Use AKS cluster autoscaling

Dynamic autoscaling adjusts the number of pods and nodes in a cluster based on resource utilization. The goal is to reduce idle resources while still providing enough capacity when demand rises.

One way to implement dynamic autoscaling in AKS is the Horizontal Pod Autoscaler (HPA), which automatically adjusts the number of pod replicas based on observed metrics such as CPU utilization.

Another option is the Cluster Autoscaler, which scales the number of nodes in the cluster based on resource demand.
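The HPA’s core scaling rule, as described in the Kubernetes documentation, is desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), with no change when the ratio is within a tolerance (0.1 by default). The Python sketch below implements only that rule plus min/max clamping; the real controller also applies stabilization windows and special handling for missing metrics and not-yet-ready pods.

```python
import math


def desired_replicas(current_replicas: int, current_value: float, target_value: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified HPA decision for a single metric such as average CPU utilization."""
    ratio = current_value / target_value
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: leave replicas unchanged
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))  # clamp to configured bounds


if __name__ == "__main__":
    print(desired_replicas(4, current_value=90, target_value=60))  # 90% vs 60% target -> 6
    print(desired_replicas(4, current_value=20, target_value=60))  # scale in -> 2
    print(desired_replicas(4, current_value=63, target_value=60))  # within tolerance -> 4
```

When the HPA adds pods that no longer fit on existing nodes, those pods stay pending, and that is the signal the Cluster Autoscaler uses to add nodes.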

10. Take advantage of AKS Automatic

AKS Automatic comes pre-configured with Microsoft’s recommended best practices for running Kubernetes in production, including security, networking, monitoring, and logging configurations.

It also automates the setup and management of AKS clusters, handling tasks such as node management, scaling, security, and upgrades. Instead of managing clusters, you can concentrate on your core work, which saves time and reduces the errors that lead to waste.

Yet, you can still access the Kubernetes API and customize the cluster when you need to. This offers a balance between ease of use and control.

11. Take advantage of AKS Node Pools

Use node pools with different VM sizes to match each application’s needs, scale infrastructure with demand, and optimize costs. Examples of VM sizes you can use in node pools include Standard_B2s (burstable), Standard_D2s_v3 (general purpose), and Standard_E2s_v3 (memory optimized).

These VM sizes offer varying CPU, memory, and storage capabilities. The combinations can save you significant money while maintaining sufficient performance levels.
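Choosing a node pool VM size largely comes down to which resource your workload runs out of first. This sketch compares the three sizes named above (2 vCPUs with 4, 8, and 16 GiB of memory, respectively) for a given total of CPU and memory requests. The hourly prices are hypothetical, and the node count ignores system-reserved resources and imperfect bin packing.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class VmSize:
    name: str
    vcpus: int
    memory_gib: int
    hourly_usd: float  # HYPOTHETICAL price; check current rates for your region


SIZES = [
    VmSize("Standard_B2s", 2, 4, 0.045),
    VmSize("Standard_D2s_v3", 2, 8, 0.11),
    VmSize("Standard_E2s_v3", 2, 16, 0.14),
]


def nodes_needed(vm: VmSize, cpu: float, memory_gib: float) -> int:
    """Nodes required to fit the total requests (ignores system reserves and packing)."""
    return max(1, math.ceil(cpu / vm.vcpus), math.ceil(memory_gib / vm.memory_gib))


def cheapest_pool(sizes: list[VmSize], cpu: float, memory_gib: float) -> tuple[VmSize, int]:
    """Return the VM size and node count with the lowest total hourly price."""
    options = [(vm, nodes_needed(vm, cpu, memory_gib)) for vm in sizes]
    return min(options, key=lambda option: option[0].hourly_usd * option[1])


if __name__ == "__main__":
    for label, cpu, mem in [("memory-heavy", 4, 48), ("cpu-heavy", 8, 8)]:
        vm, count = cheapest_pool(SIZES, cpu, mem)
        print(f"{label}: {count} x {vm.name} at ${vm.hourly_usd * count:.2f}/hour")
```

B-series VMs are burstable: they earn CPU credits while idle and spend them under load, so a price-only comparison like this one overstates their fit for sustained CPU-heavy work.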

12. Release Unused Resources

Make it a habit to identify and release unused resources, such as idle VMs or unattached disks, to avoid unnecessary expenses.

You can also schedule non-production environments to run only at certain times, such as during working hours. For example, you can stop an AKS cluster when nobody needs it and start it again later.

This eliminates unnecessary expenses and frees up valuable resources for other projects, maximizing your return on investment (ROI).
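Here is a minimal sketch of such a schedule, assuming a non-production cluster that only needs to run from 08:00 to 18:00 on weekdays. A scheduled job could use `should_run` to decide whether to call `az aks stop` or `az aks start`; the schedule, function names, and that wiring are assumptions for illustration.

```python
from datetime import datetime, time

# Assumed working hours for a non-production cluster: Monday-Friday, 08:00-18:00.
WORK_START = time(8, 0)
WORK_END = time(18, 0)


def should_run(now: datetime) -> bool:
    """True if the cluster should be up at `now` under the schedule above."""
    return now.weekday() < 5 and WORK_START <= now.time() < WORK_END


def weekly_uptime_fraction() -> float:
    """Share of the 168 hours in a week that the schedule keeps the cluster running."""
    monday = datetime(2024, 1, 1)  # 1 January 2024 was a Monday
    hours_on = sum(
        should_run(monday.replace(day=1 + d, hour=h)) for d in range(7) for h in range(24)
    )
    return hours_on / (7 * 24)


if __name__ == "__main__":
    up = weekly_uptime_fraction()
    print(f"running {up:.0%} of the week, roughly {1 - up:.0%} fewer node-hours")
```

With this schedule the cluster runs 50 of 168 weekly hours, so node compute hours drop by roughly 70%.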

13. Shift right

Embrace serverless offerings and Platform-as-a-Service (PaaS) to benefit from a pay-per-use model and reduce your AKS management costs.

Shifting right is similar to moving from a fixed-rate cell phone plan to one that charges only for the amount of data you use. You save money in the long run while still having access to the latest technology.
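A simple way to decide whether shifting right pays off is to find the traffic level at which an always-on deployment becomes cheaper than pay-per-use. The sketch below uses a hypothetical node price and a hypothetical blended price per million requests; real serverless pricing usually combines per-request and per-compute-time charges.

```python
HOURS_PER_MONTH = 730


def always_on_monthly_cost(nodes: int, node_hourly_usd: float) -> float:
    """Fixed-rate model: nodes are billed whether or not they serve traffic."""
    return nodes * node_hourly_usd * HOURS_PER_MONTH


def pay_per_use_monthly_cost(requests: int, usd_per_million: float) -> float:
    """Pay-per-use model: cost scales with the requests you actually serve."""
    return requests / 1_000_000 * usd_per_million


def break_even_requests(fixed_monthly_usd: float, usd_per_million: float) -> float:
    """Monthly request volume above which the always-on option becomes cheaper."""
    return fixed_monthly_usd / usd_per_million * 1_000_000


if __name__ == "__main__":
    fixed = always_on_monthly_cost(nodes=2, node_hourly_usd=0.10)  # HYPOTHETICAL
    per_million = 2.00                                             # HYPOTHETICAL blended price
    print(f"always-on: ${fixed:,.2f}/month")
    print(f"break-even: {break_even_requests(fixed, per_million):,.0f} requests/month")
```

Below the break-even volume (73 million requests a month with these made-up prices), pay-per-use is cheaper; above it, the always-on option wins.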

14. Shift left

Shifting cloud costs left refers to introducing cloud cost management processes earlier in the software development lifecycle. This is like how security and testing have shifted left in the DevOps pipeline.

Rather than dealing with the consequences later, you should empower your developers to manage cloud costs as they build new features and infrastructure.

This approach helps your team integrate cost visibility and controls directly into developer workflows. It also makes cloud costs a shared responsibility across engineering, finance, and FinOps teams, not just a finance concern.

The result: your engineers get to build more efficient and cost-effective solutions at the architectural level. And that supports ongoing efficiency and cost savings. Their cost awareness also makes it easier to work with finance and FinOps to prevent budget overruns.
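One lightweight way to shift costs left is a CI check that estimates how a change to a workload’s resource requests affects monthly cost and flags large increases before merge. This Python sketch assumes hypothetical per-vCPU-hour and per-GiB-hour prices, supports only the `m`, `Mi`, and `Gi` quantity suffixes, and ignores node-level overhead; it produces an estimate, not a bill.

```python
HOURS_PER_MONTH = 730
CPU_USD_PER_HOUR = 0.03    # HYPOTHETICAL price per requested vCPU-hour
MEM_USD_PER_HOUR = 0.004   # HYPOTHETICAL price per requested GiB-hour


def parse_cpu(quantity: str) -> float:
    """Kubernetes CPU quantity ("500m" or "2") -> cores."""
    return int(quantity[:-1]) / 1000 if quantity.endswith("m") else float(quantity)


def parse_memory_gib(quantity: str) -> float:
    """Kubernetes memory quantity ("512Mi" or "2Gi") -> GiB. Other suffixes unsupported here."""
    for suffix, factor in (("Mi", 1 / 1024), ("Gi", 1.0)):
        if quantity.endswith(suffix):
            return float(quantity[: -len(suffix)]) * factor
    raise ValueError(f"unsupported memory quantity: {quantity}")


def monthly_cost(replicas: int, cpu: str, memory: str) -> float:
    """Estimated monthly cost of a workload's resource requests."""
    hourly = parse_cpu(cpu) * CPU_USD_PER_HOUR + parse_memory_gib(memory) * MEM_USD_PER_HOUR
    return replicas * hourly * HOURS_PER_MONTH


def check_change(before: dict, after: dict, max_increase_usd: float) -> tuple[bool, float]:
    """Return (within_budget, estimated monthly cost delta) for a proposed change."""
    delta = monthly_cost(**after) - monthly_cost(**before)
    return delta <= max_increase_usd, delta


if __name__ == "__main__":
    before = {"replicas": 3, "cpu": "500m", "memory": "512Mi"}
    after = {"replicas": 6, "cpu": "1", "memory": "2Gi"}
    ok, delta = check_change(before, after, max_increase_usd=50.0)
    print(f"estimated change: ${delta:+,.2f}/month -> {'ok' if ok else 'over budget'}")
```

In a real pipeline, you would read the before and after requests from the manifests in the pull request and exit with a non-zero status when `check_change` reports the change is over budget, so the CI job fails.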

However, most cloud cost optimization platforms do not deliver engineering-friendly cost optimization insights. That has changed.

Best AKS Cost Optimization Platform: How CloudZero Optimizes Your AKS Cost Like No Other Cost Platform

Yes, engineers love CloudZero for diverse reasons, including:

- Allocate 100% of your Kubernetes costs in Azure, regardless of tagging quality or environment complexity.

- Easily identify and understand the cost of each pod, node, cluster, namespace, and service. CloudZero is still the most accurate Kubernetes cost analysis tool available. Try us.

- View your per-unit costs in business terms, such as hourly Cost per Customer, per Environment, and per Team. Knowing the people, products, and processes driving your AKS costs makes it much easier to cut waste and invest where it pays off most.

- Receive engineering-optimized insights, such as Cost per deployment, per software feature, per request, per environment, per team, and more. Perfect for empowering your engineers to build and maintain cost-effective solutions.

- Take advantage of CloudZero’s real-time anomaly detection to catch and fix issues before they become costly problems.

A diverse range of ambitious brands, including Remitly, Drift, Malwarebytes, and Nubank, now use CloudZero to understand, control, and optimize their cloud costs. Upstart has also saved $20 million so far. In one year, most of our customers improved their Cloud Efficiency Rate by 33%, and CloudZero pays for itself in about three months.
